Hadoop HBase Serialization and DeSerialization Tutorial

This Hadoop HBase serialization and deserialization tutorial is for readers looking for information on serialization and deserialization in Hadoop. It walks beginners through a worked example that serializes records with Apache Avro and reads them back, and was originally prepared by professional trainers at a Hadoop training institute.

It is aimed at anyone eager to become a certified Hadoop developer, or looking for a Hadoop training institute in Bangalore that offers advanced tutorials and a Hadoop certification course for tech enthusiasts who want to learn the technology from beginner to advanced level.

HBase Configuration Serialization

Add these libraries to your Java project build path:

avro-1.8.1.jar

avro-tools-1.8.1.jar

log4j-api-2.0-beta9.jar

log4j-core-2.0-beta9.jar

Step 1 – Change the directory to /home/cloudera/Desktop/Avro

$ cd /home/cloudera/Desktop/Avro

Step 2 – Create a new directory named Schema in /home/cloudera/Desktop/Avro

$ mkdir /home/cloudera/Desktop/Avro/Schema

Step 3 – Change the directory to /home/cloudera/Desktop/Avro/Schema

$ cd /home/cloudera/Desktop/Avro/Schema

Step 4 – Create a new Avro schema file, Prwatech.avsc, in /home/cloudera/Desktop/Avro/Schema. The command below creates Prwatech.avsc if it doesn’t exist and opens it for editing.

$ gedit Prwatech.avsc

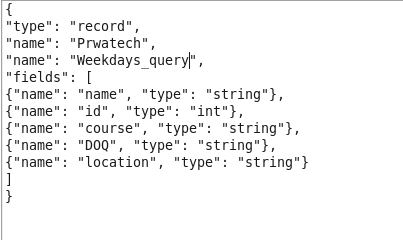

Step 5 – Add the schema definition to the Prwatech.avsc file. Save and close it.
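The schema body is not reproduced in the text here. Judging from the fields that the Java program in this tutorial sets (name, id, course, DOQ, location), a schema along the following lines would fit. Treat it as a sketch: the record name `Prwatech`, the namespace `com.test.avro`, and the field types are assumptions inferred from the program, not the author's original file.

```json
{
  "namespace": "com.test.avro",
  "type": "record",
  "name": "Prwatech",
  "fields": [
    {"name": "name", "type": "string"},
    {"name": "id", "type": "int"},
    {"name": "course", "type": "string"},
    {"name": "DOQ", "type": "string"},
    {"name": "location", "type": "string"}
  ]
}
```

Every field name passed to `put(...)` in the program has to appear in the `fields` list, and each value has to match the declared type, otherwise the write fails at run time.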

Open Eclipse and write the following program:

    package com.test.avro;

    import java.io.File;
    import java.io.IOException;

    import org.apache.avro.Schema;
    import org.apache.avro.file.DataFileWriter;
    import org.apache.avro.generic.GenericData;
    import org.apache.avro.generic.GenericDatumWriter;
    import org.apache.avro.generic.GenericRecord;
    import org.apache.avro.io.DatumWriter;

    public class SerializeNew {

        public static void main(String[] args) throws IOException {

            // Parse the Avro schema from the .avsc file.
            Schema schema = new Schema.Parser().parse(
                    new File("/home/cloudera/Desktop/Avro/Schema/Prwatech.avsc"));

            // Build the first record; field names must match the schema.
            GenericRecord e1 = new GenericData.Record(schema);
            e1.put("name", "Ramman");
            e1.put("id", 1); // plain int literal; a leading zero such as 001 is read as octal in Java
            e1.put("course", "Hadoop");
            e1.put("DOQ", "Feb");
            e1.put("location", "Bangalore");

            // Build the second record.
            GenericRecord e2 = new GenericData.Record(schema);
            e2.put("name", "Akash");
            e2.put("id", 2);
            e2.put("course", "Python");
            e2.put("DOQ", "March");
            e2.put("location", "Pune");

            // Write both records to an Avro data file (binary, despite the .txt name).
            DatumWriter<GenericRecord> datumWriter = new GenericDatumWriter<GenericRecord>(schema);
            DataFileWriter<GenericRecord> dataFileWriter = new DataFileWriter<GenericRecord>(datumWriter);
            dataFileWriter.create(schema, new File("/home/cloudera/Desktop/Avro/data.txt"));
            dataFileWriter.append(e1);
            dataFileWriter.append(e2);
            dataFileWriter.close();

            System.out.println("data successfully serialized");
        }
    }

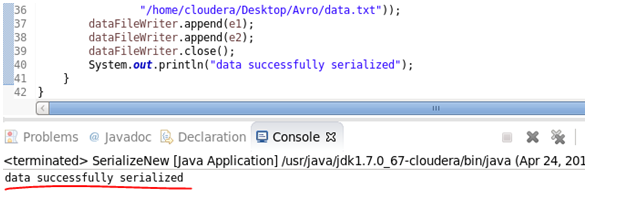

Now compile the program

Output will be

HBase Configuration DeSerialization

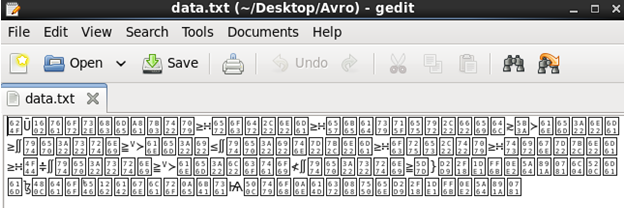

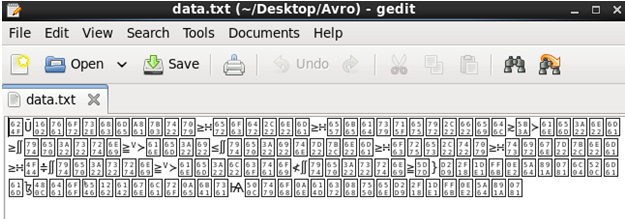

Input Data will be

Run following command in eclipse within same package (but create different class)

package com.test.avro;

import java.io.File;

import org.apache.avro.Schema;

import org.apache.avro.file.DataFileReader;

import org.apache.avro.generic.GenericDatumReader;

import org.apache.avro.generic.GenericRecord;

import org.apache.avro.io.DatumReader;

public class DeserializeNew {

public static void main(String args[]) throws Exception {

// Instantiating the Schema.Parser class.

Schema schema = new Schema.Parser().parse(new File(

/home/cloudera/Desktop/Avro/Schema/Prwatech.avsc”));

DatumReader<GenericRecord> datumReader = new GenericDatumReader<GenericRecord>(schema);

DataFileReader<GenericRecord> dataFileReader = new DataFileReader<GenericRecord>( new File(“/home/cloudera/Desktop/Avro/data.txt”),datumReader);

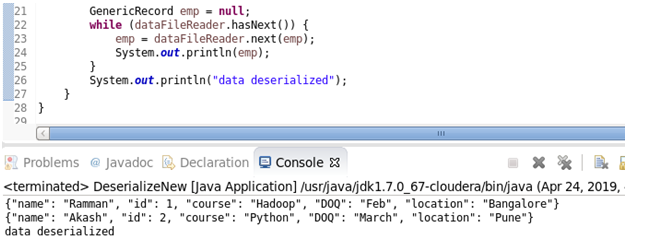

GenericRecord emp = null;

while (dataFileReader.hasNext()) {

emp = dataFileReader.next(emp);

System.out.println(emp);

}

System.out.println(“data deserialized”);

}

}

After DeSerialization the output will be
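An Avro data file stores the writer's schema in its header, so a reader can also be opened without the .avsc file. The following is a minimal round-trip sketch under stated assumptions: the class name `AvroRoundTrip`, the two-field `Student` schema, and the temporary file are made up for illustration and are not part of this tutorial's project. It needs the same Avro jars on the build path, and it uses try-with-resources so the writer and reader are closed even if an exception is thrown.

```java
import java.io.File;
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.avro.Schema;
import org.apache.avro.file.DataFileReader;
import org.apache.avro.file.DataFileWriter;
import org.apache.avro.generic.GenericData;
import org.apache.avro.generic.GenericDatumReader;
import org.apache.avro.generic.GenericDatumWriter;
import org.apache.avro.generic.GenericRecord;

public class AvroRoundTrip {

    // Hypothetical schema, inlined so the example does not depend on a file on disk.
    static final String SCHEMA_JSON =
        "{\"type\":\"record\",\"name\":\"Student\",\"fields\":["
        + "{\"name\":\"name\",\"type\":\"string\"},"
        + "{\"name\":\"id\",\"type\":\"int\"}]}";

    // Writes one record to the given file, reads it back, and returns each record as text.
    static List<String> roundTrip(File file) throws IOException {
        Schema schema = new Schema.Parser().parse(SCHEMA_JSON);

        GenericRecord record = new GenericData.Record(schema);
        record.put("name", "Ramman");
        record.put("id", 1);

        // The writer is closed automatically at the end of the try block.
        try (DataFileWriter<GenericRecord> writer =
                 new DataFileWriter<>(new GenericDatumWriter<GenericRecord>(schema))) {
            writer.create(schema, file);
            writer.append(record);
        }

        List<String> out = new ArrayList<>();
        // No schema is passed to the reader: it is taken from the file header.
        try (DataFileReader<GenericRecord> reader =
                 new DataFileReader<>(file, new GenericDatumReader<GenericRecord>())) {
            for (GenericRecord r : reader) {
                out.add(r.toString());
            }
        }
        return out;
    }

    public static void main(String[] args) throws IOException {
        File file = File.createTempFile("avro-demo", ".avro");
        file.deleteOnExit();
        for (String line : roundTrip(file)) {
            System.out.println(line);
        }
    }
}
```

Passing the schema explicitly, as DeserializeNew does, is still useful when the reading program expects a specific record layout; the schema-less reader is convenient for inspecting a file whose schema is not at hand.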

Succeed in your career as a Hadoop developer by joining Prwatech, India’s leading Hadoop training institute in Bangalore.