Reinforcement Learning Tutorial for Beginners

In this Reinforcement Learning Tutorial for Beginners, you can learn how to frame a reinforcement learning problem. Are you looking for a platform that explains what reinforcement learning is, with an example of reinforcement learning, the difference between supervised and reinforcement learning, unsupervised vs reinforcement learning, and practical applications of reinforcement learning? Or are you looking forward to taking the advanced Data Science Certification Course with Machine Learning from India’s leading Data Science training institute? Then you’ve landed on the right path. The tutorial below will help you understand these topics in detail, so follow the tutorials of Prwatech, India’s leading Data Science training institute in Bangalore, and become a pro machine learning developer.

What is Reinforcement Learning?

Reinforcement learning is growing rapidly and has produced a wide variety of learning algorithms for various applications. It is one of the vital branches of artificial intelligence. In reinforcement learning, machines and software agents automatically determine the ideal behavior within a specific context in order to improve their performance. It is a reward-based technique in which the agent takes suitable actions to maximize reward in a specific situation. Various software and machines employ it to find the best possible behavior or path to take in a particular situation.

Framing Reinforcement Learning

Basically, reinforcement learning is about learning what to do, that is, how to map situations to actions. The target is to maximize the reward (which is usually a numerical value in machine learning). The learner is not told which action to take by a trainer; it must discover which actions yield the most reward. Let’s consider the example of a child learning to ride a cycle.

The general steps involved while a child learns cycling:

The first thing the child does is observe how you are cycling. Grasping this concept, the child attempts to copy the pedaling action.

But before that, it is necessary to sit on the cycle and balance. So he tries to balance himself. While doing that he struggles and slips, but still attempts the pedaling stage.

Now that he has learned to balance, he has a new challenge: managing pedaling and braking according to the situation. He has to keep his balance while pedaling and braking, and he tries to learn it.

Now the real task for the child is cycling on an actual road, which may not be as easy as the smooth surface used for practice. There are many more things to keep in mind, like balancing the weight of the body, pedaling, braking in case of obstacles, controlling the speed of the cycle, etc.

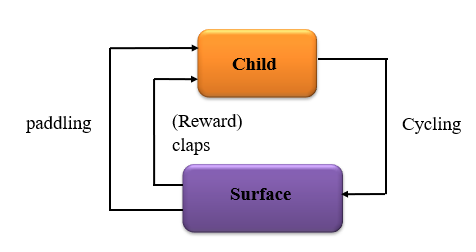

Example of Reinforcement Learning

Let’s frame the above example. The problem statement is to ride a cycle. The child is the agent, acting on the environment, which is the surface on which he tries to cycle, by taking actions like pedaling and braking. These actions move the child from one state to another according to the situations he faces. The child gets a reward, let’s say claps from his parents, when he cycles well. When he falls, takes a wrong step, or is not able to cycle, he does not receive any clap, which acts as a kind of negative reward. This is how we summarize a reinforcement learning problem. The above example of a child learning cycling can be represented in the form of reinforcement learning as:

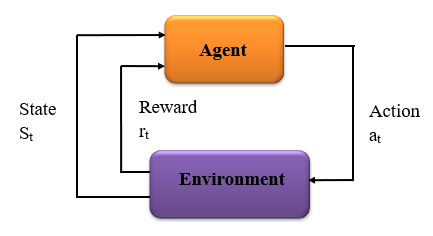

So, the overall reinforcement learning process can be stated as follows:

The RL agent observes the state S0 from the environment. Based on state S0, the RL agent takes an action A0 and receives a positive or negative reward. The environment then moves to a new state, and the cycle repeats.
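The loop described above can be sketched in a few lines of Python. This is only a minimal illustration: the `ToyCyclingEnv` class, its balance probabilities, and the `run_episode` helper are hypothetical names and numbers invented for this sketch, not part of any library.

```python
import random


class ToyCyclingEnv:
    """Hypothetical toy environment, invented for illustration only.

    The state is the number of steps the rider has stayed upright so far.
    """

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state  # initial state S0

    def step(self, action):
        # Assumed dynamics: pedaling keeps balance more often than braking.
        p_upright = 0.8 if action == "pedal" else 0.5
        if self.rng.random() < p_upright:
            self.state += 1
            return self.state, 1, False  # next state, reward (a clap), not done
        return self.state, -1, True      # fell: negative reward, episode over


def run_episode(env, policy, max_steps=20):
    """Run one episode and return the list of (state, action, reward) steps."""
    trajectory = []
    state = env.reset()                              # agent observes S0
    for _ in range(max_steps):
        action = policy(state)                       # agent picks an action
        next_state, reward, done = env.step(action)  # environment responds
        trajectory.append((state, action, reward))
        state = next_state
        if done:
            break
    return trajectory


episode = run_episode(ToyCyclingEnv(seed=1), policy=lambda s: "pedal")
print(sum(r for _, _, r in episode))  # total reward collected in this episode
```

Here the policy always pedals; a learning agent would instead adjust its policy based on the rewards it collects.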

Difference Between Supervised and Reinforcement Learning

In supervised learning, an external supervisor with knowledge of the environment is present and shares that knowledge with the agent to complete the task. But in some environments the agent can perform so many different combinations of subtasks to achieve the target that providing a supervisor is impractical, and the agent has to learn by itself. For example, in chess there is a huge number of possible moves, so creating a database of moves is impractical. It is more suitable for the agent to learn from its own experience and build a knowledge base from it. In both supervised and reinforcement learning there is a mapping between input and output. But in reinforcement learning the feedback comes from a reward function, which enables the agent to learn by itself.

Unsupervised vs Reinforcement Learning:

As discussed above, reinforcement learning involves a mapping between input and output, which is absent in unsupervised learning. In unsupervised learning, the main task is to find the underlying patterns rather than the mapping. For example, if the task is to recommend a product to a user, an unsupervised learning algorithm will look at products similar to those the person has previously bought, of a specific brand, etc., and suggest one of them. A reinforcement learning algorithm, in contrast, gets constant feedback from the user by suggesting a few similar products, and then builds a knowledge base of products and related brands that the person will like.

Exploration vs exploitation dilemma:

Let’s consider a row of slot machines in a casino. Each machine has its own winning probability. As a player, you want to make as much money as possible. The dilemma is: how do you find which machine has the best odds while still maximizing your profit? The first naive approach is to select only one slot machine and keep pulling its lever all the time. It may give you some payouts, but if you operate one slot the whole day, the chances of losing money are high, because that machine may not be the best one. This is the pure exploitation approach. Another approach is to pull the lever of each and every slot machine, hoping to hit the jackpot. This will give you sub-optimal payouts. It is another naive approach, called pure exploration. So exploitation is about using already known information to heighten the reward, while exploration is about gathering more information about the environment. Overall, we have to find a proper balance between these approaches to get the maximum reward, as neither is optimal on its own. This is the exploration vs. exploitation dilemma of reinforcement learning.
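One simple way to balance the two approaches on the slot machine example is an epsilon-greedy rule: most of the time play the machine that currently looks best, and occasionally try a random one. The sketch below is a minimal illustration under stated assumptions; the function name `epsilon_greedy_bandit`, the winning probabilities, and the value of `epsilon` are all made up for this example.

```python
import random


def epsilon_greedy_bandit(win_probs, epsilon=0.1, pulls=5000, seed=0):
    """Play a row of simulated slot machines with an epsilon-greedy rule.

    win_probs holds the (normally unknown) winning probability of each machine.
    Returns the estimated win rates, the pull counts, and the total reward.
    """
    rng = random.Random(seed)
    n = len(win_probs)
    counts = [0] * n        # how many times each machine was played
    estimates = [0.0] * n   # running average reward per machine
    total_reward = 0
    for _ in range(pulls):
        if rng.random() < epsilon:
            arm = rng.randrange(n)  # explore: try a random machine
        else:
            arm = max(range(n), key=lambda i: estimates[i])  # exploit best guess
        reward = 1 if rng.random() < win_probs[arm] else 0
        counts[arm] += 1
        # Incremental update of the average reward seen on this machine.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        total_reward += reward
    return estimates, counts, total_reward


estimates, counts, total = epsilon_greedy_bandit([0.2, 0.5, 0.8])
print(counts)  # how often each machine was played
```

Setting `epsilon=0` gives the pure exploitation approach and `epsilon=1` the pure exploration approach, so the parameter makes the trade-off explicit.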

Terms in Reinforcement Learning:

Agent: The reinforcement learning algorithm, which learns by trial and error.

Environment: The world through which the agent moves.

Action: All possible steps that the agent can take.

State: The current condition of the agent in the environment.

Reward: An instant return from the environment to appraise the last action.

Policy: The approach that the agent uses to determine the next action based on the current state.

Markov Decision Process (MDP)

The mathematical framework for defining a solution in a reinforcement learning scenario is called the Markov Decision Process. It has the following components:

Set of actions (A)

Set of states (S)

Reward (R)

Policy (π)

Value (V)

To move from the start state to the end state (S), we have to take actions (A). For each action we take, we get a reward (R) as feedback, which can be positive or negative. The set of actions we take defines our policy (π), and the rewards we get in return define our value (V). Our task is to maximize the rewards by choosing the correct policy. Hence, we have to maximize the expectation ‘E’ for all possible values of S at a given time, as indicated by:

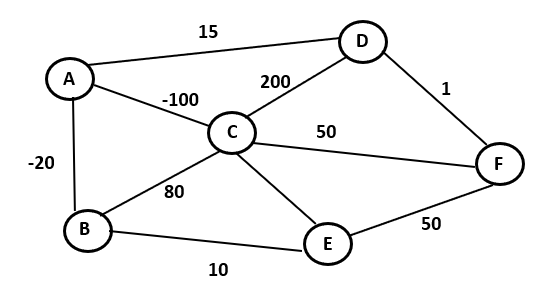
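For readers who want the objective in symbols, one common way to write it is shown below. This is an assumed textbook-style formulation, not a transcription of the image: the time index t, the horizon T, and the discount factor γ are notation introduced here for illustration.

```latex
V^{\pi}(s) = \mathbb{E}\left[\, \sum_{t=0}^{T} \gamma^{t} R_{t+1} \;\middle|\; S_0 = s,\ \pi \right],
\qquad
\pi^{*} = \arg\max_{\pi} V^{\pi}(s) \quad \text{for all } s \in S
```

In words: the value of a state under a policy is the expected cumulative reward collected from that state onward, and the task is to find the policy that makes this value as large as possible.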

Shortest Path Problem:

Let’s take the example of a shortest path calculation. The task is to go from node A to node F at the lowest possible cost. The number on each edge joining two nodes is the cost of traversing that edge. Negative costs are treated as earnings on the way. We define the Value as the total cumulative reward obtained by following a policy.

Here,

The nodes represent the set of states, S = {A, B, C, D, E, F}

The actions are moves from one place to another, like {A -> C, E -> F, etc.}

The reward function is nothing but the value representing the edge.

The policy represents the “path” to complete the task, for example {A ->D -> F}.

Let’s initialize the starting point as ‘A’. We have to find the shortest path from A to F. Suppose we are at place A. From here, the only visible paths are those to the next destinations, and anything beyond those nodes is unknown at this stage. We can use a greedy approach and select the best possible next step, which is going from A to D, out of the paths from A to the reachable nodes B, C, and D. Similarly, now that we are at node D and want to find a path to the next node, we can choose among the paths from D to B, C, and F. We see that the path from D to F has the lowest cost; hence we take that path.

So, in this case, the policy was to take A -> D -> F and our Value is -70. This is a greedy approach to solving the problem: at every step we take the locally best option. The closely related epsilon-greedy algorithm does the same most of the time, but with a small probability epsilon it tries a random step instead, so that it keeps exploring. With the purely greedy policy, whenever we want to go from place A to place F, we would always choose A -> D -> F.
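The greedy walk from A to F can be sketched as follows. The edge costs in `graph` are hypothetical values chosen only so that the run reproduces the A -> D -> F policy; they are not taken from the original figure. The function name `greedy_path` is likewise an assumption, and the value is computed here by treating the reward as the negative of the cost.

```python
def greedy_path(graph, start, goal):
    """Walk from start to goal, always taking the cheapest outgoing edge.

    graph maps each node to a dict of {neighbor: cost}.
    Returns the visited path and its total cost.
    """
    path, total_cost = [start], 0
    node = start
    while node != goal:
        # Only unvisited neighbors are considered, so the walk cannot loop.
        options = {n: c for n, c in graph[node].items() if n not in path}
        if not options:
            raise ValueError(f"greedy walk got stuck at node {node}")
        nxt = min(options, key=options.get)  # locally cheapest next step
        total_cost += options[nxt]
        path.append(nxt)
        node = nxt
    return path, total_cost


# Hypothetical edge costs, invented for illustration.
graph = {
    "A": {"B": 40, "C": 50, "D": 30},
    "B": {"E": 20, "F": 80},
    "C": {"F": 70},
    "D": {"B": 60, "C": 45, "F": 40},
    "E": {"F": 55},
    "F": {},
}

path, cost = greedy_path(graph, "A", "F")
print(path, -cost)  # ['A', 'D', 'F'] -70
```

Note that a greedy walk only looks one step ahead, so on other graphs it can miss a cheaper overall route; that is one reason exploration matters.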
