Integrating Jupyter notebooks with Spark

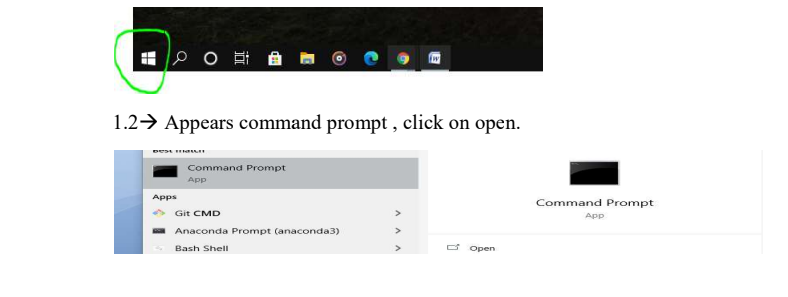

Apache Spark facilitates interactive and collaborative data analysis and exploration within a scalable and distributed computing framework. Jupyter notebooks provide an interactive environment where users can write and execute code, visualize data, and document their analysis in a single, user-friendly interface. On the other hand, Apache Spark is a powerful distributed computing engine designed for processing large-scale data sets across clusters of computers. By integrating Jupyter notebooks with Spark, users can leverage the capabilities of both platforms to perform data analysis, machine learning, and data processing tasks efficiently. Spark provides robust support for parallel processing and distributed computing, allowing users to handle large volumes of data and complex computations with ease. Meanwhile, Jupyter notebooks offer an intuitive interface for writing Spark code, visualizing results, and collaborating with others. Integration typically involves setting up a Spark cluster and configuring Jupyter notebooks to connect to the cluster using Spark's built-in support for Python (PySpark) or other programming languages such as Scala or R. This enables users to run Spark code directly within Jupyter notebooks, access Spark's distributed computing capabilities, and interactively explore and analyze data in real-time. 1.1Click on windows (or) Start symbol on task bar and type cmd.