Spark Streaming Kafka Tutorial - Spark Streaming with Kafka

This tutorial walks through the Kafka setup that most Spark developers use for Spark Streaming. If you want to become a certified Spark developer, consider an Apache Spark Scala certification course from India’s leading Apache Spark Scala training institute.

Kafka setup for Spark Streaming

Step 1: Download Kafka from this link

https://www.apache.org/dyn/closer.cgi?path=/kafka/0.11.0.0/kafka_2.11-0.11.0.0.tgz

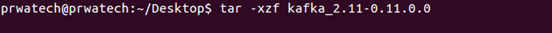

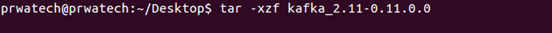

Step 2: Untar the downloaded archive using this command

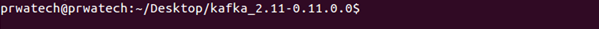
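The untar command itself survives only in the screenshot, so here is a minimal sketch of Steps 1 and 2 from the command line. It assumes `curl` and `tar` are installed and that the release is still served from the Apache archive at the URL below; that URL is an assumption, since the link in Step 1 typically opens a mirror-selection page rather than the file itself. The function names `download_kafka` and `extract_kafka` are hypothetical.

```shell
# Version strings taken from the download link in Step 1.
SCALA_VERSION="2.11"
KAFKA_VERSION="0.11.0.0"
KAFKA_DIR="kafka_${SCALA_VERSION}-${KAFKA_VERSION}"
KAFKA_TGZ="${KAFKA_DIR}.tgz"

# Assumption: the Apache archive hosts this release at the usual dist path.
KAFKA_URL="https://archive.apache.org/dist/kafka/${KAFKA_VERSION}/${KAFKA_TGZ}"

# Download the archive unless it is already in the current directory.
download_kafka() {
  [ -f "$KAFKA_TGZ" ] || curl -fLO "$KAFKA_URL"
}

# Unpack the archive; this creates the directory entered in Step 3.
extract_kafka() {
  tar -xzf "$KAFKA_TGZ"
}
```

Running `download_kafka && extract_kafka` should leave a `kafka_2.11-0.11.0.0` directory next to the archive.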

Step 3: Use the cd command to get into the directory

cd kafka_2.11-0.11.0.0

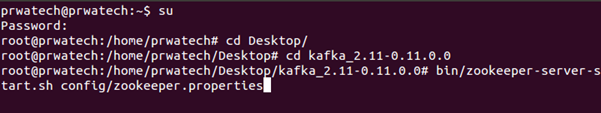

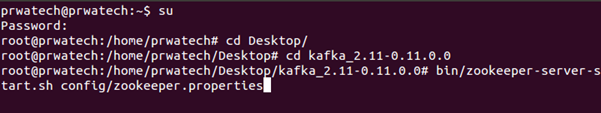

Step 4: Open a terminal and start ZooKeeper

bin/zookeeper-server-start.sh config/zookeeper.properties

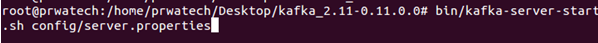

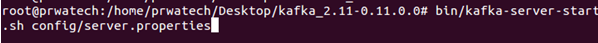

Step 5: Open another terminal and start the Kafka broker

bin/kafka-server-start.sh config/server.properties

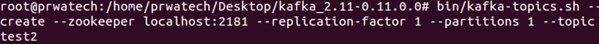

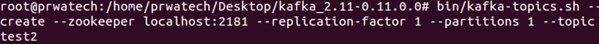

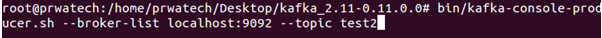
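Before creating a topic, it can help to confirm that both services are accepting connections. The following is a minimal sketch, assuming bash (it relies on bash's `/dev/tcp` redirection) and the default ports used by the commands in this tutorial: 2181 for ZooKeeper and 9092 for the broker. `port_open` and `report` are hypothetical helper names, not part of Kafka.

```shell
#!/usr/bin/env bash
# port_open HOST PORT: succeed if a TCP connection can be opened.
# Hypothetical helper; uses bash's built-in /dev/tcp pseudo-device.
port_open() {
  (exec 3<>"/dev/tcp/$1/$2") 2>/dev/null
}

# report NAME HOST PORT: print a one-line status for a service.
report() {
  if port_open "$2" "$3"; then
    echo "$1 is listening on $2:$3"
  else
    echo "$1 is NOT reachable on $2:$3"
  fi
}
```

For example, `report ZooKeeper localhost 2181` followed by `report Kafka localhost 9092` prints one status line per service.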

Step 6: Open another terminal, create a topic, and start a producer

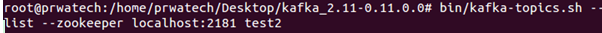

bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic test2

List the topics to confirm that test2 was created:

bin/kafka-topics.sh --list --zookeeper localhost:2181

test2

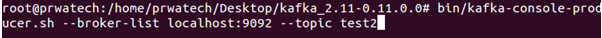
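To see the partition count and replication factor that were just requested, the topic can also be described. A small sketch, assuming the commands are run from the Kafka directory; the wrapper functions are hypothetical, while `--describe` and `--if-not-exists` are options of `kafka-topics.sh` in this release line.

```shell
ZK="localhost:2181"
TOPIC="test2"

# Create the topic, skipping creation if it already exists.
create_topic() {
  bin/kafka-topics.sh --create --if-not-exists --zookeeper "$ZK" \
    --replication-factor 1 --partitions 1 --topic "$TOPIC"
}

# Show partitions, leader, and replicas for the topic.
describe_topic() {
  bin/kafka-topics.sh --describe --zookeeper "$ZK" --topic "$TOPIC"
}
```

With a single broker, a replication factor of 1 is the only value that can succeed, which is why the tutorial uses it.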

bin/kafka-console-producer.sh --broker-list localhost:9092 --topic test2

Type a few messages at the producer prompt, one per line:

Hello World

Hello India

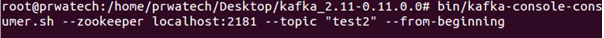

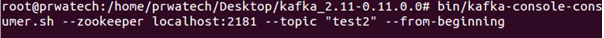

Step 7: Open another terminal and start a consumer

bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic test2 --from-beginning

Whatever you type at the producer prompt appears here:

Hello World

Hello India
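The same round trip can be scripted without interactive prompts, which is handy for a quick smoke test. This is a sketch under the same assumptions as the steps above (broker on localhost:9092, topic test2, run from the Kafka directory); the function names are hypothetical, and `--max-messages` makes the console consumer exit after reading the given number of messages.

```shell
BROKER="localhost:9092"
TOPIC="test2"

# The two sample messages typed at the producer prompt in Step 6.
sample_messages() {
  printf '%s\n' "Hello World" "Hello India"
}

# Send the sample messages through the console producer via stdin.
produce_samples() {
  sample_messages | bin/kafka-console-producer.sh \
    --broker-list "$BROKER" --topic "$TOPIC"
}

# Read N messages from the start of the topic, then exit.
consume_first() {
  bin/kafka-console-consumer.sh --bootstrap-server "$BROKER" \
    --topic "$TOPIC" --from-beginning --max-messages "$1"
}
```

Calling `produce_samples` and then `consume_first 2` should print the two messages, provided the topic held no earlier messages.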

Interested in learning more? Become a certified expert in Apache Spark by enrolling with Prwatech E-learning, India’s leading advanced Apache Spark training institute in Bangalore.
