Hadoop-Hive script with Oozie

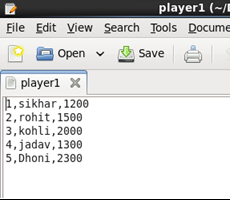

Integrating Hadoop, Hive, and Oozie makes it possible to automate and orchestrate data processing workflows in the Hadoop ecosystem. Hadoop is a distributed computing framework for processing large datasets across clusters, while Hive provides a SQL-like interface for querying and analyzing data stored in the Hadoop Distributed File System (HDFS). Oozie is a workflow scheduler for managing and coordinating Hadoop jobs. Combining them lets users define data processing pipelines as Hive scripts inside Oozie workflows: Oozie acts as the coordinator, running Hive scripts and other Hadoop jobs in a defined sequence or according to their dependencies.

Create a dataset

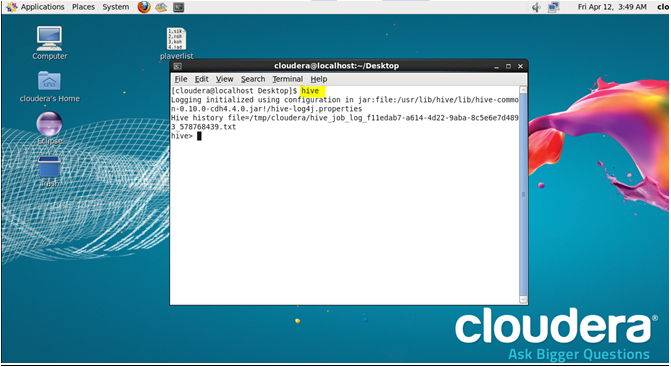

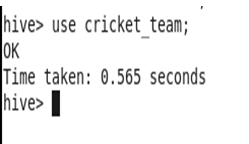
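The dataset itself is only visible in the screenshots, so the following is a minimal sketch of how a small comma-separated file could be produced. The column layout (`player_id`, `player_name`, `player_role`, `matches_played`), the placeholder rows, and the helper name `write_dataset` are all assumptions made for illustration, not the original data.

```python
import csv
from pathlib import Path

# Hypothetical layout: player_id, player_name, player_role, matches_played.
# The rows are placeholders; replace them with the real dataset.
ROWS = [
    (1, "player_one", "batsman", 10),
    (2, "player_two", "bowler", 12),
    (3, "player_three", "all_rounder", 8),
]


def write_dataset(path):
    """Write the rows as comma-separated text without a header line."""
    path = Path(path)
    with path.open("w", newline="") as handle:
        # Use "\n" line endings so no stray "\r" ends up in the last column.
        csv.writer(handle, lineterminator="\n").writerows(ROWS)
    return path
```

Calling `write_dataset("ind_player.csv")` writes the file to the current directory. The header line is left out on purpose, since a plain text table would otherwise load it as a data row.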

♦ Start Hive in the terminal

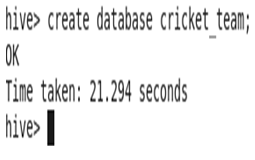

Once Hive is open, create the database cricket_team, then switch to it so the table ind_player can be created there.

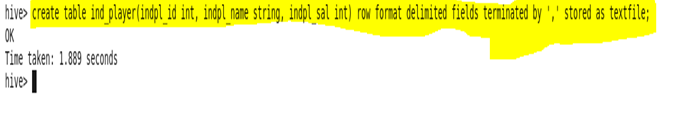

♦ Create the table ind_player

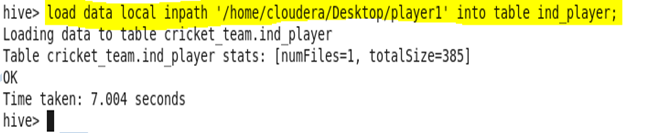

Load data into the table ind_player

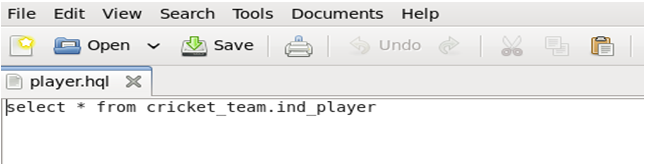
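The three Hive steps so far (create the database, create the table, load the data) can be sketched as one set of HiveQL statements. The database and table names come from the steps above. The column names and types, the comma delimiter, and the use of `LOAD DATA LOCAL INPATH` are assumptions, because the actual statements appear only in the screenshots. The helper names `build_setup_hql` and `run_in_hive` are hypothetical.

```python
import subprocess


def build_setup_hql(local_csv):
    """Return HiveQL that creates the database and table, then loads the file.

    Column names and types are assumptions for illustration only.
    """
    return f"""
CREATE DATABASE IF NOT EXISTS cricket_team;
USE cricket_team;
CREATE TABLE IF NOT EXISTS ind_player (
  player_id INT,
  player_name STRING,
  player_role STRING,
  matches_played INT
)
ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
STORED AS TEXTFILE;
LOAD DATA LOCAL INPATH '{local_csv}' INTO TABLE ind_player;
""".strip()


def run_in_hive(hql):
    """Run the statements with the Hive CLI (requires `hive` on the PATH)."""
    subprocess.run(["hive", "-e", hql], check=True)
```

`print(build_setup_hql("/path/to/ind_player.csv"))` shows the statements, which can also be typed directly at the Hive prompt. `run_in_hive` only works on a machine where Hive is installed.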

♦ Write a Hive script and save it with the .hql extension

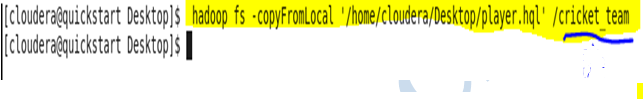
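As a rough illustration of this step, the sketch below writes a small Hive script to a `.hql` file. The query is hypothetical (the original script is visible only in the screenshot), and it assumes a `player_role` column exists in `ind_player`. The helper name `write_hive_script` is also an assumption.

```python
from pathlib import Path

# Hypothetical query: count players per role in the ind_player table.
SCRIPT = """\
USE cricket_team;
SELECT player_role, COUNT(*) AS players
FROM ind_player
GROUP BY player_role;
"""


def write_hive_script(path="ind_player.hql"):
    """Save the script text, insisting on the .hql extension used in this guide."""
    path = Path(path)
    if path.suffix != ".hql":
        raise ValueError("expected a file name ending in .hql")
    path.write_text(SCRIPT)
    return path
```

Putting `USE cricket_team;` at the top keeps the script self-contained, so it does not depend on whichever database the Oozie action starts in.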

Save the .hql file in HDFS

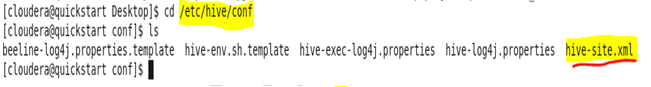

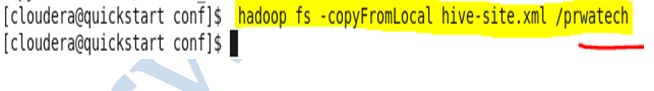

♦ Go to hive/conf and copy hive-site.xml to HDFS

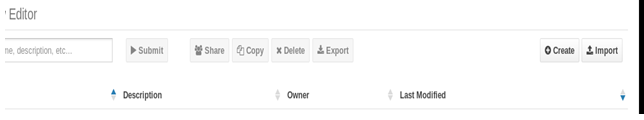

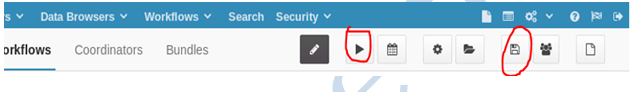
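Both uploads (the `.hql` script and `hive-site.xml`) can be sketched with the standard `hdfs dfs` commands. The helper below only builds the command lines, so it can be inspected without a cluster. The function names and any paths passed in are hypothetical; use the local and HDFS locations from your own setup.

```python
import subprocess


def hdfs_upload_commands(local_files, hdfs_dir):
    """Build `hdfs dfs` commands that create hdfs_dir and copy files into it."""
    commands = [["hdfs", "dfs", "-mkdir", "-p", hdfs_dir]]
    for local in local_files:
        # -f overwrites a copy that already exists at the destination.
        commands.append(["hdfs", "dfs", "-put", "-f", local, hdfs_dir])
    return commands


def run_all(commands):
    """Execute the commands in order (requires `hdfs` on the PATH)."""
    for command in commands:
        subprocess.run(command, check=True)
```

For example, `hdfs_upload_commands(["ind_player.hql", "hive-site.xml"], "/user/<name>/oozie_hive")` returns three commands: one `-mkdir -p` followed by two `-put -f` calls. The target directory here is a placeholder.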

Go to the Oozie dashboard

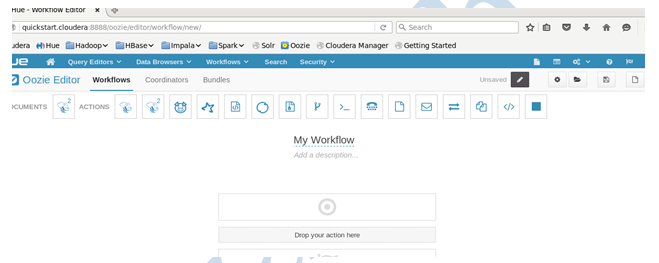

Go to Hue → Workflow → Editors → Workflow → Create

♦ Drag the Hive action into "Drop your action here"

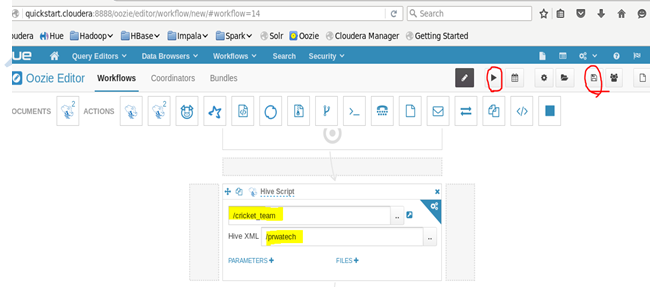

Enter the HDFS paths of the script file and the Hive XML file (hive-site.xml), then click Save.

♦ Then submit the workflow

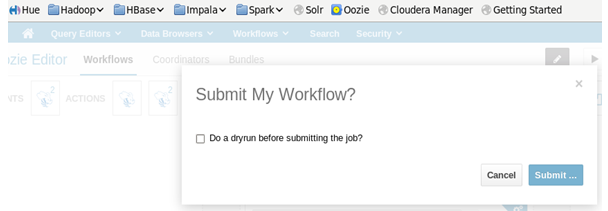
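Behind the editor, Hue stores the workflow as an Oozie `workflow.xml` containing a Hive action. The sketch below builds a comparable definition to show where the script path and the hive-site.xml path end up. It is an approximation, not Hue's exact output: the schema versions (`uri:oozie:workflow:0.5`, `uri:oozie:hive-action:0.2`), the node names, and the function name `build_workflow` are assumptions, and `${jobTracker}` and `${nameNode}` are properties that would be supplied at submission time.

```python
import xml.etree.ElementTree as ET

# Assumed schema versions; the ones Hue generates may differ.
WF_NS = "uri:oozie:workflow:0.5"
HIVE_NS = "uri:oozie:hive-action:0.2"


def build_workflow(script_path, hive_site_path, name="hive-script-wf"):
    """Return workflow XML with one Hive action that runs script_path."""
    wf = ET.Element("workflow-app", {"xmlns": WF_NS, "name": name})
    ET.SubElement(wf, "start", {"to": "hive-node"})

    action = ET.SubElement(wf, "action", {"name": "hive-node"})
    hive = ET.SubElement(action, "hive", {"xmlns": HIVE_NS})
    ET.SubElement(hive, "job-tracker").text = "${jobTracker}"
    ET.SubElement(hive, "name-node").text = "${nameNode}"
    # job-xml points at the hive-site.xml copied to HDFS earlier.
    ET.SubElement(hive, "job-xml").text = hive_site_path
    ET.SubElement(hive, "script").text = script_path
    ET.SubElement(action, "ok", {"to": "end"})
    ET.SubElement(action, "error", {"to": "fail"})

    kill = ET.SubElement(wf, "kill", {"name": "fail"})
    ET.SubElement(kill, "message").text = "Hive action failed"
    ET.SubElement(wf, "end", {"name": "end"})
    return ET.tostring(wf, encoding="unicode")
```

The two arguments correspond to the two fields filled in on the Hive action in Hue: the script file and the Hive XML file.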

Save and Run

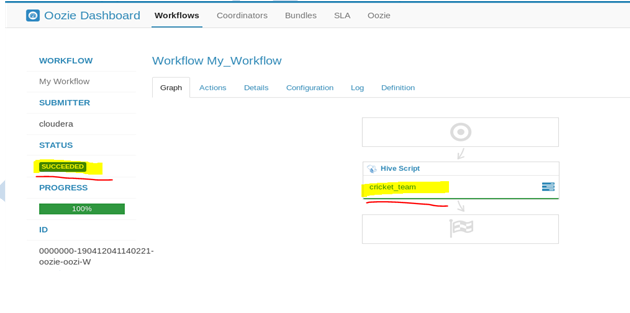

♦ After a successful run, you will see a screen like this