Apache Spark Basic RDD Commands

Welcome to the world of Apache Spark RDD commands. In this tutorial, you can learn the basic RDD commands that most Spark developers use. If you are aiming to become a certified Spark developer, get your Apache Spark certification course from Prwatech, India's leading Apache Spark training institute, and learn from professionals with 15+ years of hands-on experience. Follow the commands below to get started.

Basic commands used in Apache Spark

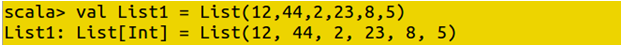

Creating a new list of values

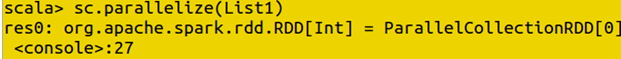

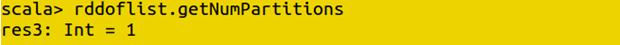

Parallelize the data set. This creates a single RDD split into two partitions. When no partition count is passed, Spark uses sc.defaultParallelism, which was 2 in this environment.
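To make the partition idea concrete, here is a minimal plain-Python sketch (no Spark needed) that cuts a list into contiguous slices, one per partition. The helper `slice_into_partitions` is hypothetical; its index arithmetic follows the scheme Spark uses to slice a local collection, though the exact placement of elements in PySpark may differ.

```python
def slice_into_partitions(data, num_slices=2):
    """Split a list into num_slices contiguous chunks (hypothetical helper).

    Chunk i covers indices [i*n // k, (i+1)*n // k), so chunk sizes
    differ by at most one element.
    """
    if num_slices < 1:
        raise ValueError("num_slices must be at least 1")
    n = len(data)
    return [data[i * n // num_slices:(i + 1) * n // num_slices]
            for i in range(num_slices)]


data = [1, 2, 3, 4, 5]
partitions = slice_into_partitions(data)  # two partitions, as in the default above
print(len(partitions))  # 2
print(partitions)       # [[1, 2], [3, 4, 5]]
```

With Spark itself, `sc.parallelize(data, 2)` creates the RDD, `rdd.getNumPartitions()` reports the partition count, and `rdd.glom().collect()` shows the contents of each partition.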

Creating a new RDD from the list

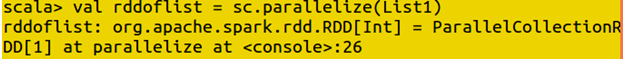

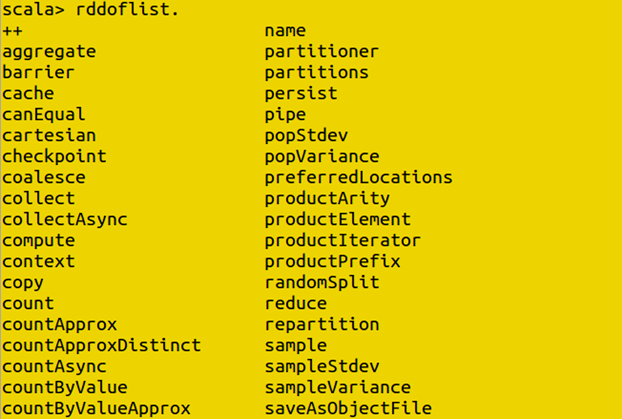

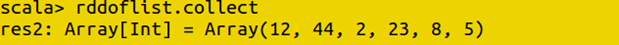

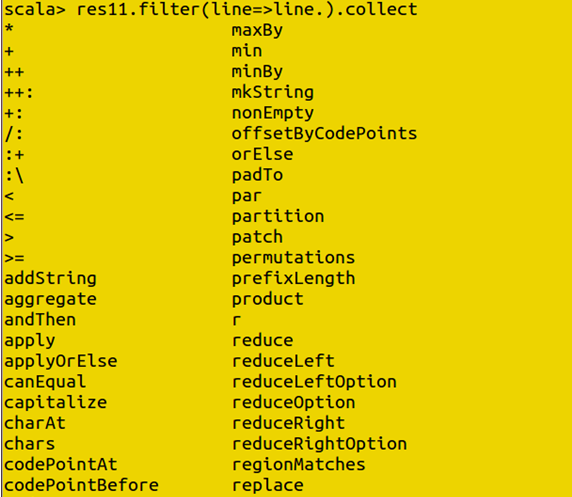

RDD functions

To view the whole RDD

Creating a new RDD with the output

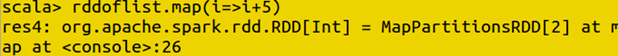

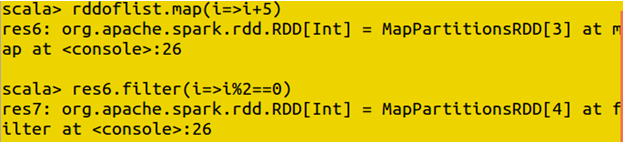

Creating a new RDD using map

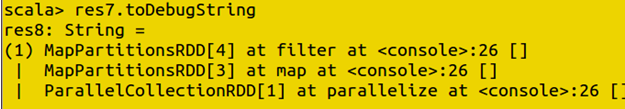
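`map` applies a function to every element and returns a new RDD, leaving the original one untouched. Here is a plain-Python sketch over a list of partitions; `map_each` is a hypothetical helper standing in for `rdd.map(func)`.

```python
def map_each(partitions, func):
    """Apply func to every element while keeping the partition layout.

    A new list of partitions is returned and the input is not modified,
    mirroring how rdd.map(func) produces a new RDD.
    """
    return [[func(x) for x in part] for part in partitions]


numbers = [[1, 2], [3, 4, 5]]            # two partitions
squares = map_each(numbers, lambda x: x * x)
print(squares)  # [[1, 4], [9, 16, 25]]
print(numbers)  # [[1, 2], [3, 4, 5]]  (original is unchanged)
```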

To check the function of RDD

Transformation

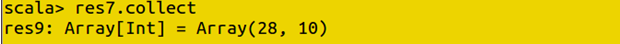
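Transformations such as `map` and `filter` are lazy in Spark: they only record what to compute, and the work runs when an action such as `collect()` or `count()` is called. Python's built-in `map` is also lazy, so it offers a rough local analogy (this is not Spark's actual mechanism):

```python
calls = []


def square(x):
    calls.append(x)  # record each time the function actually runs
    return x * x


lazy = map(square, [1, 2, 3])  # like a transformation: nothing runs yet
print(calls)                   # []
result = list(lazy)            # like an action such as collect(): work happens now
print(result)                  # [1, 4, 9]
print(calls)                   # [1, 2, 3]
```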

Count operation: counts the total number of elements in the RDD

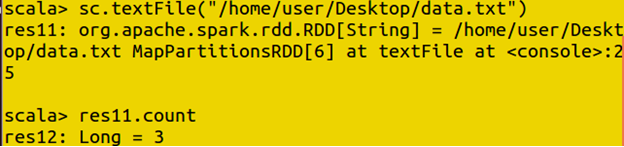

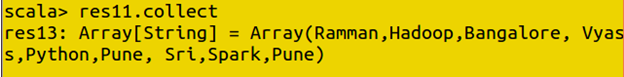
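Spark's `count()` counts each partition separately and then adds the per-partition totals on the driver. A plain-Python sketch of that two-step idea, with a hypothetical `count` helper over a list of partitions:

```python
def count(partitions):
    """Count elements by sizing each partition and summing the results."""
    per_partition = [sum(1 for _ in part) for part in partitions]
    return sum(per_partition)


print(count([[1, 2], [3, 4, 5]]))  # 5
print(count([[], [7]]))            # 1 (empty partitions contribute 0)
```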

Read the file from the source

Display file

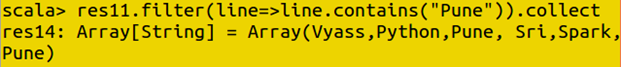
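`sc.textFile(path)` produces an RDD with one string element per line of the file, without the line terminator. The plain-Python sketch below builds the same list locally; the file name and contents are made up for the example, and `read_lines` is a hypothetical helper.

```python
import os
import tempfile


def read_lines(path):
    """Return one element per line, without the trailing newline."""
    with open(path, encoding="utf-8") as f:
        return [line.rstrip("\n") for line in f]


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "sample.txt")  # hypothetical input file
    with open(path, "w", encoding="utf-8") as f:
        f.write("spark is fast\nspark is lazy\nhadoop is batch\n")
    lines = read_lines(path)

print(lines)  # ['spark is fast', 'spark is lazy', 'hadoop is batch']
```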

Using a separator to split the data

To find the length of the array

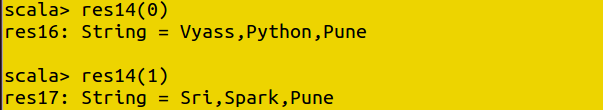
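Splitting each line on a separator gives either one array per line (as `rdd.map` with `split` does) or one flat sequence of fields (as `rdd.flatMap` does). This sketch assumes comma-separated lines; the separator and sample data are hypothetical.

```python
lines = ["spark,is,fast", "hadoop,is,batch"]

# Like rdd.map(lambda line: line.split(",")): one list of fields per line
fields = [line.split(",") for line in lines]
print(fields)      # [['spark', 'is', 'fast'], ['hadoop', 'is', 'batch']]

# Length of the first line's array and access to one field by index
first = fields[0]
print(len(first))  # 3
print(first[2])    # fast

# Like rdd.flatMap(lambda line: line.split(",")): all fields in one flat list
words = [w for line in lines for w in line.split(",")]
print(len(words))  # 6
```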

To check value using index

Line operations

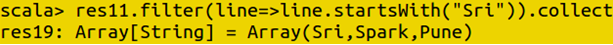

Filtering lines by their starting keyword

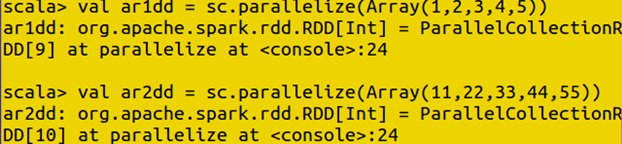

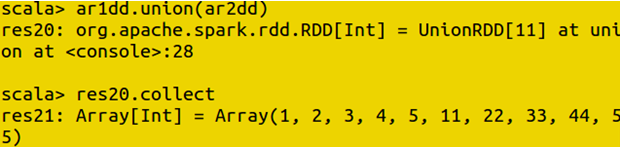
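Keeping only the lines that begin with a given keyword is a `filter` with `startswith`. A plain-Python version, with hypothetical log lines and the keyword `ERROR` chosen for illustration:

```python
lines = ["ERROR disk full", "INFO job started", "ERROR timeout"]

# Like rdd.filter(lambda line: line.startswith("ERROR"))
errors = [line for line in lines if line.startswith("ERROR")]
print(errors)       # ['ERROR disk full', 'ERROR timeout']
print(len(errors))  # 2, like calling count() on the filtered RDD
```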

Creating two arrays for the union

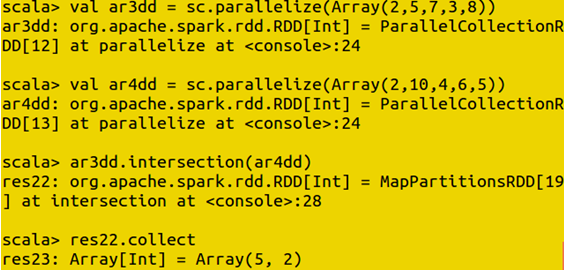
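Unlike a mathematical set union, `rdd.union` simply combines the elements of both RDDs and keeps duplicates. The plain-Python equivalent is list concatenation; in Spark you would chain `.distinct()` to drop the repeats.

```python
a = [1, 2, 3]
b = [3, 4, 5]

# rdd_a.union(rdd_b): all elements of both inputs, duplicates kept
union = a + b
print(union)               # [1, 2, 3, 3, 4, 5]

# Roughly what rdd_a.union(rdd_b).distinct() contains (Spark gives no order)
print(sorted(set(union)))  # [1, 2, 3, 4, 5]
```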

Intersection

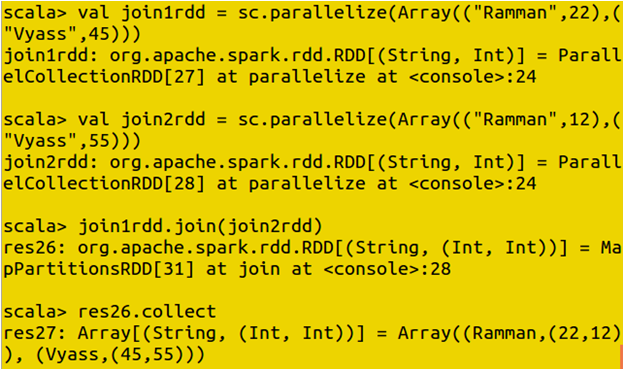
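`rdd.intersection` returns the elements present in both RDDs, with duplicates removed even if the inputs contained them; it needs a shuffle, and the output order is not guaranteed. A plain-Python sketch of the result:

```python
a = [1, 2, 2, 3, 4]
b = [2, 2, 4, 6]

# rdd_a.intersection(rdd_b): common elements, each listed once.
# Sorted here because Spark does not guarantee any output order.
common = sorted(set(a) & set(b))
print(common)  # [2, 4]
```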

Join function

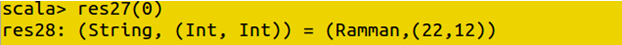

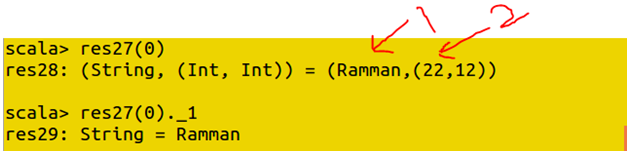

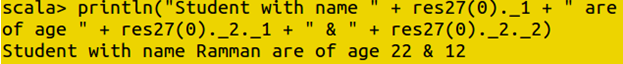
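`join` works on pair RDDs of `(key, value)` tuples: it is an inner join that yields `(key, (left_value, right_value))` for every pair of matching values, and keys found on only one side are dropped. A plain-Python sketch with a hypothetical `inner_join` helper and made-up data:

```python
from collections import defaultdict


def inner_join(left, right):
    """Inner join of two lists of (key, value) pairs, like left.join(right)."""
    right_by_key = defaultdict(list)
    for k, w in right:
        right_by_key[k].append(w)
    # Pair every left value with every right value that shares its key
    return [(k, (v, w)) for k, v in left for w in right_by_key.get(k, [])]


names = [(1, "Asha"), (2, "Ravi"), (3, "Meena")]
cities = [(1, "Pune"), (2, "Delhi"), (2, "Mumbai")]
print(inner_join(names, cities))
# [(1, ('Asha', 'Pune')), (2, ('Ravi', 'Delhi')), (2, ('Ravi', 'Mumbai'))]
```

Key 3 has no match on the right, so it does not appear in the result. Spark's output order can differ from this list.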

Using the index to get a value

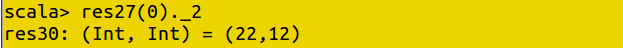

Printing the values in a readable format

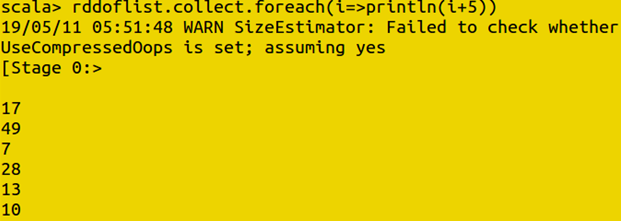

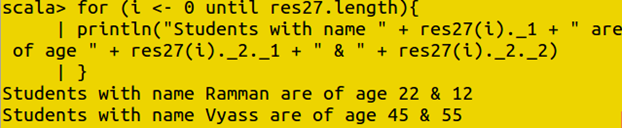

Printing multiple values using a for loop

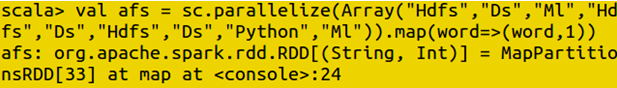

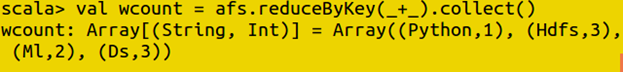

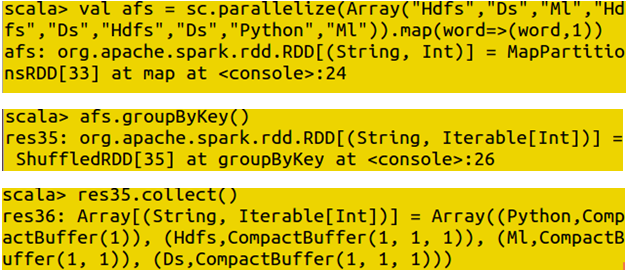
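Once joined tuples are on the driver (for example via `collect()`), indexing picks out the fields and a for loop prints them. The sample rows and the `format_rows` helper are hypothetical. On a cluster, calling `collect()` before printing matters, because `rdd.foreach(print)` runs on the executors and prints to their logs rather than to your console.

```python
def format_rows(pairs):
    """Turn (key, (name, city)) tuples into readable strings."""
    # value[0] and value[1] select fields of the inner tuple by index
    return [f"{key}: {value[0]} lives in {value[1]}" for key, value in pairs]


joined = [(1, ("Asha", "Pune")), (2, ("Ravi", "Delhi"))]  # e.g. rdd.collect() output
for row in format_rows(joined):
    print(row)
```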

Using MapReduce on an RDD (word count)

Group By Key

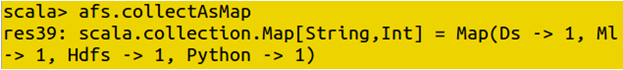

Map values

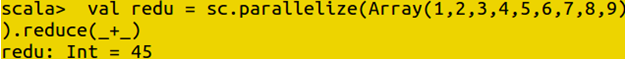

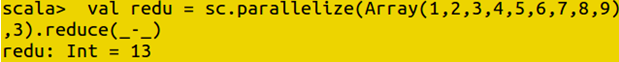
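The word count pipeline above splits lines into words, pairs each word with 1, groups the pairs by key and sums each group. The same steps in plain Python, with hypothetical input lines and comments naming the matching RDD operation:

```python
from collections import defaultdict

lines = ["spark is fast", "spark is lazy"]

# flatMap: split every line into words
words = [w for line in lines for w in line.split()]
# map: pair each word with a count of 1
pairs = [(w, 1) for w in words]

# groupByKey: collect all values that share a key
grouped = defaultdict(list)
for word, one in pairs:
    grouped[word].append(one)

# mapValues(sum): add up the grouped 1s for each word
counts = {word: sum(ones) for word, ones in grouped.items()}
print(counts)  # {'spark': 2, 'is': 2, 'fast': 1, 'lazy': 1}
```

In Spark, `reduceByKey(lambda a, b: a + b)` produces the same counts and is usually preferred, because it combines values inside each partition before the shuffle instead of sending every individual 1 across the network.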

Aggregating integer values

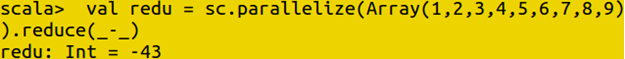
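`rdd.reduce(func)` first reduces each partition on its own and then reduces the partial results, which is why `func` must be associative and commutative. A plain-Python sketch of those two levels, using hypothetical partitions and addition:

```python
from functools import reduce
from operator import add

partitions = [[1, 2, 3], [4, 5], [6]]

# Step 1: reduce inside each non-empty partition
partials = [reduce(add, part) for part in partitions if part]
# Step 2: reduce the partial results into one value
total = reduce(add, partials)
print(partials)  # [6, 9, 6]
print(total)     # 21
```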

Add:

Subtract:
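`rdd_a.subtract(rdd_b)` keeps every element of the first RDD that does not occur in the second; repeated elements of the first RDD are kept, and the output order is not guaranteed. A plain-Python sketch with made-up data:

```python
a = [1, 1, 2, 3, 4]
b = [2, 4]

# Like rdd_a.subtract(rdd_b): drop anything that appears in b
exclude = set(b)
difference = [x for x in a if x not in exclude]
print(difference)  # [1, 1, 3]
```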

Changing the parallelism:

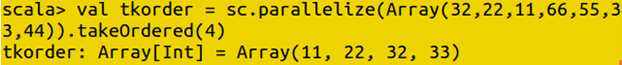
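Spark changes the number of partitions with `repartition(n)`, which performs a full shuffle and can raise or lower the count, or with `coalesce(n)`, which by default only lowers it and avoids a full shuffle; `getNumPartitions()` reports the current value. The sketch below gives a rough local picture of coalescing by merging neighbouring partitions. The helper `merge_partitions` is hypothetical, and Spark's real coalesce also takes data locality into account.

```python
def merge_partitions(partitions, num_partitions):
    """Reduce the partition count by merging neighbouring partitions."""
    if not 1 <= num_partitions <= len(partitions):
        raise ValueError("num_partitions must be between 1 and the current count")
    n = len(partitions)
    merged = []
    for i in range(num_partitions):
        # Take a contiguous group of the old partitions and flatten it
        group = partitions[i * n // num_partitions:(i + 1) * n // num_partitions]
        merged.append([x for part in group for x in part])
    return merged


four = [[1, 2], [3], [4, 5], [6]]
print(merge_partitions(four, 2))  # [[1, 2, 3], [4, 5, 6]]
```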

Take ordered: returns the first n elements of the RDD in ascending order, or in the order defined by an optional key function

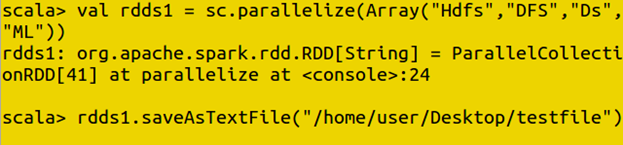

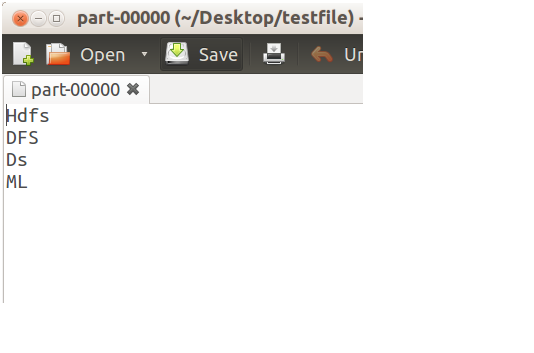
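`takeOrdered(num, key=None)` avoids sorting the whole RDD: each partition only needs its own `num` smallest candidates, and those candidates are merged. A plain-Python sketch of that idea using `heapq.nsmallest`; the helper `take_ordered` and the sample partitions are hypothetical.

```python
import heapq


def take_ordered(partitions, num, key=None):
    """Return the num smallest elements across all partitions, in order."""
    # Keep only the num best candidates from each partition
    candidates = [heapq.nsmallest(num, part, key=key) for part in partitions]
    # Merge the candidates and pick the overall num best
    return heapq.nsmallest(num, [x for c in candidates for x in c], key=key)


parts = [[10, 1, 2], [9, 3, 4], [5, 6, 7]]
print(take_ordered(parts, 3))                    # [1, 2, 3]
print(take_ordered(parts, 3, key=lambda x: -x))  # [10, 9, 7]
```

Passing `key=lambda x: -x` reverses the order, which is a common way to get the largest values.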

Save file

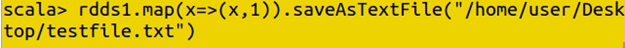

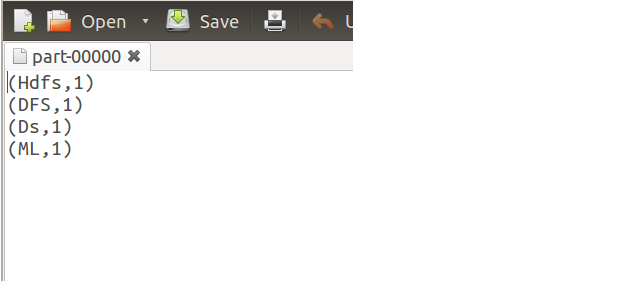
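`rdd.saveAsTextFile(path)` writes a directory, not a single file: each partition becomes one `part-NNNNN` file with one element per line, and the call fails if the directory already exists. The sketch below reproduces that layout locally with a hypothetical helper and output path; Spark's real output also includes a `_SUCCESS` marker file, which this sketch leaves out.

```python
import os
import tempfile


def save_as_text_dir(partitions, out_dir):
    """Write one part-NNNNN file per partition, one element per line."""
    os.makedirs(out_dir)  # raises if out_dir already exists
    for i, part in enumerate(partitions):
        with open(os.path.join(out_dir, f"part-{i:05d}"), "w", encoding="utf-8") as f:
            for x in part:
                f.write(f"{x}\n")


with tempfile.TemporaryDirectory() as tmp:
    out = os.path.join(tmp, "output")  # hypothetical output path
    save_as_text_dir([[1, 2], [3, 4, 5]], out)
    files = sorted(os.listdir(out))
    with open(os.path.join(out, "part-00001"), encoding="utf-8") as f:
        second = f.read().splitlines()

print(files)   # ['part-00000', 'part-00001']
print(second)  # ['3', '4', '5']
```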

Saving the file in map format

Thanks for reading! If you are keen to learn this technology like a pro, from scratch to an advanced level, ask the world-class trainers at Prwatech, India's leading Apache Spark training institute, and get the benefits of its Apache Spark course.