Create and Configure Dataproc Clusters on GCP

Dataproc is a managed Spark and Hadoop service that lets you take advantage of open source data tools for batch processing, querying, streaming, and machine learning.

Prerequisites

A GCP account

Open the Google Cloud Console.

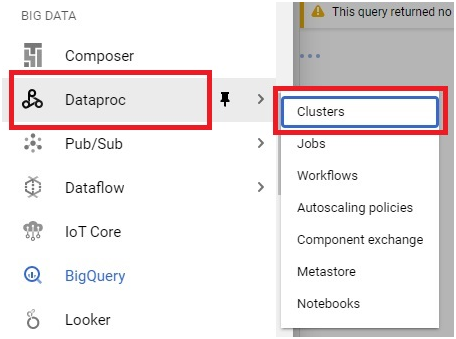

Open the navigation menu and go to Dataproc > Clusters.

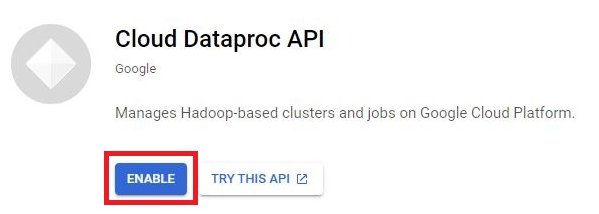

Click Enable to enable the Dataproc API.

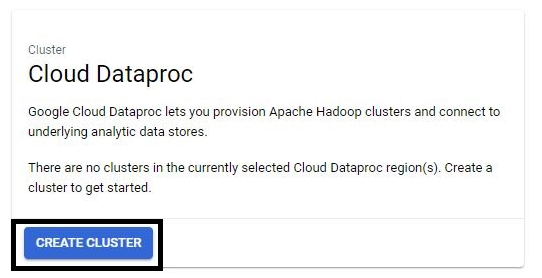
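The same step can also be done from a terminal. The snippet below is a minimal sketch that only builds and prints the `gcloud` command; the project ID `my-project` is a placeholder, not a value from this walkthrough, and running the printed command assumes the gcloud CLI is installed and authenticated.

```python
import shlex

# Service name of the Dataproc API.
DATAPROC_API = "dataproc.googleapis.com"


def enable_api_command(project_id):
    """Build the gcloud command that enables the Dataproc API for a project."""
    return ["gcloud", "services", "enable", DATAPROC_API, f"--project={project_id}"]


# "my-project" is a placeholder project ID (assumption).
cmd = enable_api_command("my-project")
print(shlex.join(cmd))
```

Copy the printed line into a shell to run it.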

Click Create cluster.

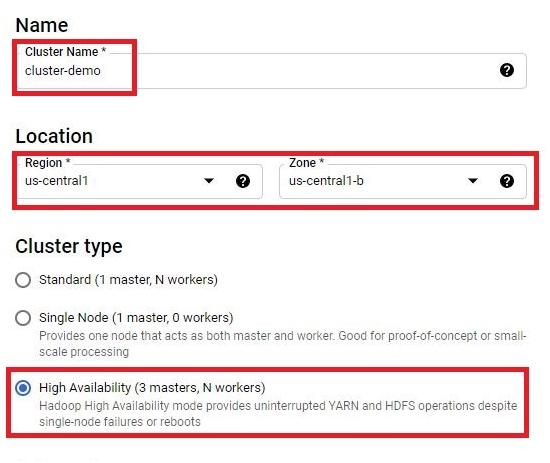

Enter a name for the cluster.

Choose the Location and Zone.

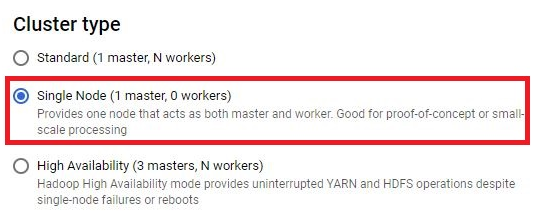

Set the Cluster type to High Availability.

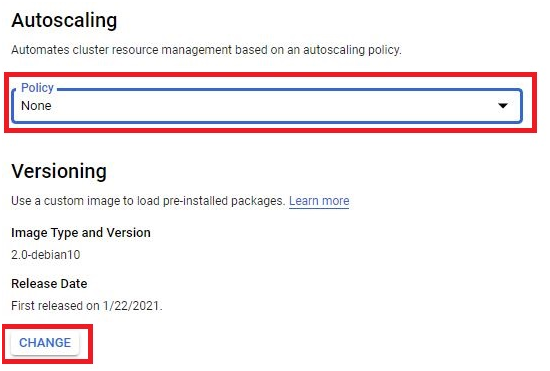

If you have an autoscaling policy, select it; otherwise choose None.
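For reference, the name, location, cluster type, and autoscaling choices map onto flags of `gcloud dataproc clusters create`. This is a rough sketch that only assembles the command; the cluster name, region, and zone are placeholder values, and high availability is expressed here as three master nodes.

```python
import shlex


def base_create_command(name, region, zone, high_availability=True,
                        autoscaling_policy=None):
    """Assemble the first part of a cluster-creation command."""
    cmd = ["gcloud", "dataproc", "clusters", "create", name,
           f"--region={region}", f"--zone={zone}"]
    if high_availability:
        # A high availability cluster runs three master nodes.
        cmd.append("--num-masters=3")
    if autoscaling_policy:
        # Only added when a policy exists; otherwise this is the "None" choice.
        cmd.append(f"--autoscaling-policy={autoscaling_policy}")
    return cmd


# Placeholder values (assumptions, not taken from the screenshots).
cmd = base_create_command("demo-cluster", "us-central1", "us-central1-a")
print(shlex.join(cmd))
```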

Click Change to change the OS.

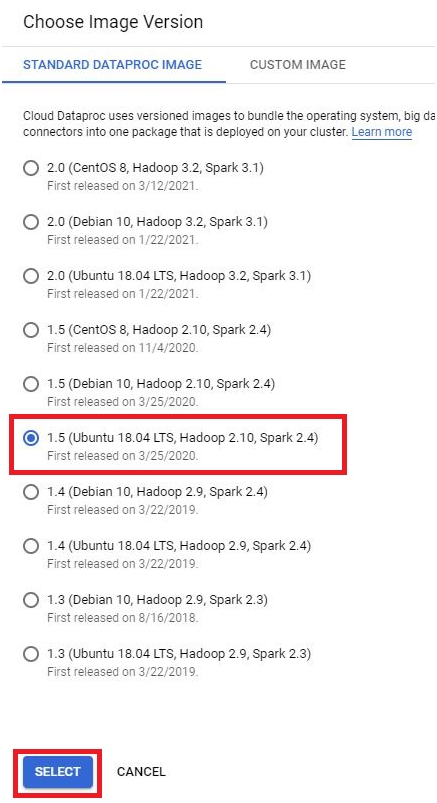

Select the OS you want, then click Select.

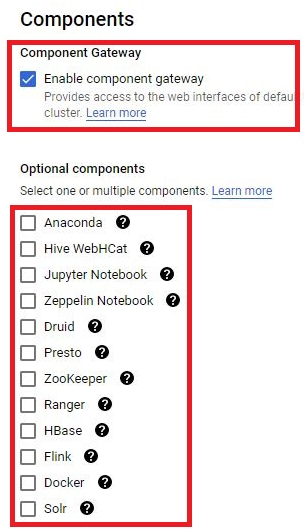

Under Components, tick Enable component gateway. If you need any optional components, tick those as well.

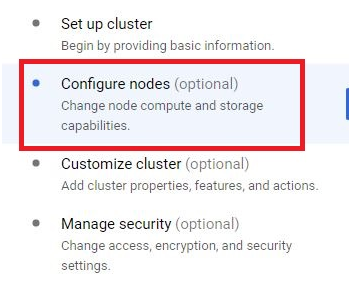
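The image and component choices above have command-line counterparts too. The sketch below builds just those flags; the image version `2.1-debian11` and the component names are illustrative assumptions, so substitute whatever you picked in the console.

```python
def image_and_component_flags(image_version, optional_components=()):
    """Build flags for the OS image, component gateway, and optional components."""
    flags = [f"--image-version={image_version}", "--enable-component-gateway"]
    if optional_components:
        # Optional components are passed as one comma-separated list.
        flags.append("--optional-components=" + ",".join(optional_components))
    return flags


# Example values only (assumptions).
flags = image_and_component_flags("2.1-debian11", ["JUPYTER", "ZEPPELIN"])
print(" ".join(flags))
```

Append these flags to a `gcloud dataproc clusters create` command.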

Click Configure nodes.

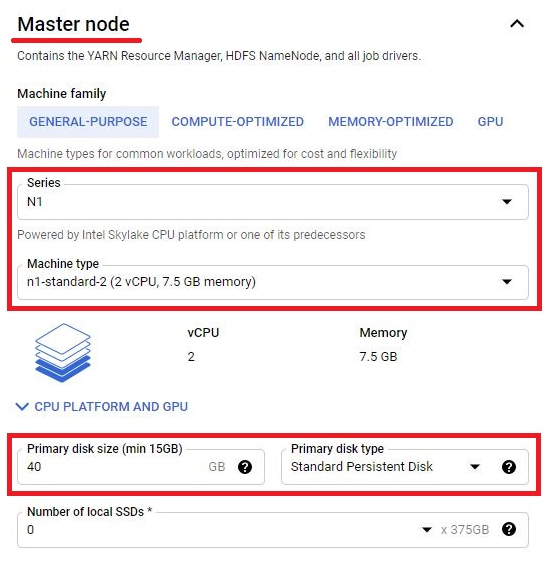

For the master node, choose the machine specification you want.

Choose the disk size and type for the master node.

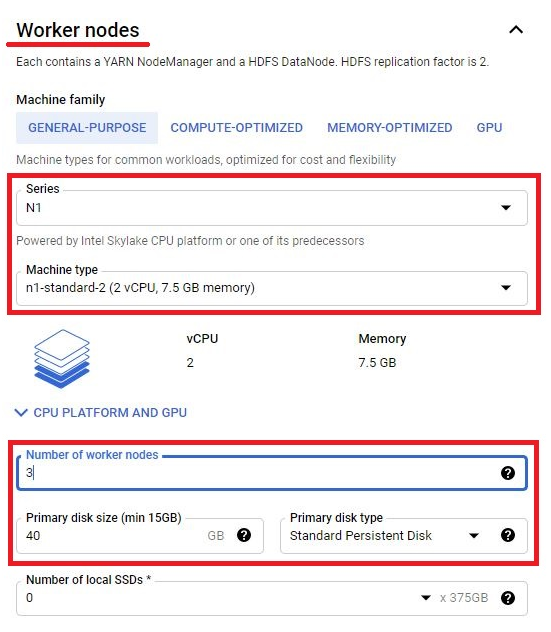

For the worker nodes, choose the machine specification.

Choose the number of worker nodes for the cluster.

Choose the disk size and type.

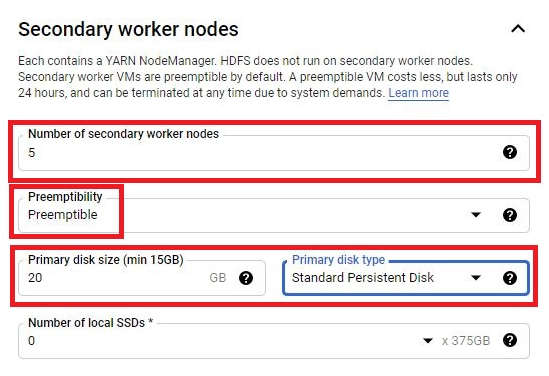
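As an illustration, the master and worker settings can be written as `gcloud dataproc clusters create` flags. The machine type, disk size, and disk type below are placeholder values, not recommendations; the helper `node_flags` is a hypothetical name used only for this sketch.

```python
def node_flags(role, machine_type, disk_size, disk_type):
    """Build machine and boot-disk flags for a node role ("master" or "worker")."""
    if role not in ("master", "worker"):
        raise ValueError("role must be 'master' or 'worker'")
    return [
        f"--{role}-machine-type={machine_type}",
        f"--{role}-boot-disk-size={disk_size}",
        f"--{role}-boot-disk-type={disk_type}",
    ]


# Placeholder sizes (assumptions).
flags = node_flags("master", "n1-standard-4", "100GB", "pd-standard")
flags += node_flags("worker", "n1-standard-4", "200GB", "pd-standard")
flags.append("--num-workers=2")  # number of primary worker nodes
print(" ".join(flags))
```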

If you need secondary worker nodes, choose how many.

Choose the preemptibility.

Choose the disk size and type.

NB: If you are using a free account, the cluster won’t get created with this configuration. In that case, choose the Single Node (1 master) cluster type instead.

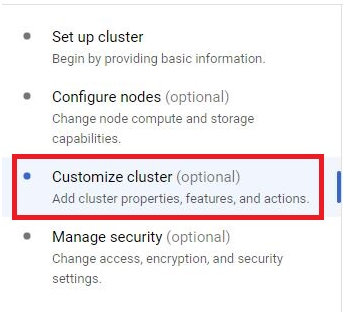
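The secondary worker options, and the single-node fallback from the note above, might be expressed as flags like this. It is a sketch with assumed defaults; a single-node cluster has no workers, so the helper returns only `--single-node` in that case.

```python
def sizing_flags(single_node=False, num_secondary=0,
                 secondary_type="preemptible",
                 disk_size="100GB", disk_type="pd-standard"):
    """Build flags for either a single-node cluster or secondary workers."""
    if single_node:
        # Single node: one master and no workers, so nothing else applies.
        return ["--single-node"]
    if num_secondary <= 0:
        return []
    return [
        f"--num-secondary-workers={num_secondary}",
        f"--secondary-worker-type={secondary_type}",
        f"--secondary-worker-boot-disk-size={disk_size}",
        f"--secondary-worker-boot-disk-type={disk_type}",
    ]


print(" ".join(sizing_flags(num_secondary=2)))
print(" ".join(sizing_flags(single_node=True)))
```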

Select Customize cluster.

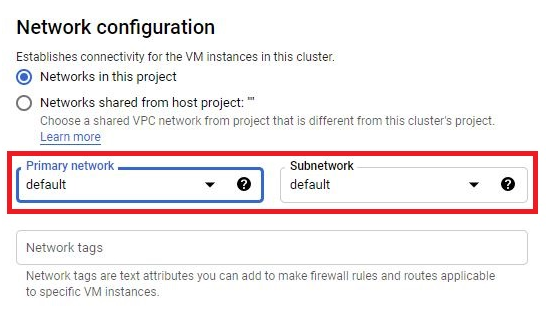

Choose the network for the cluster. If you have your own VPC network, you can choose it; otherwise choose default.

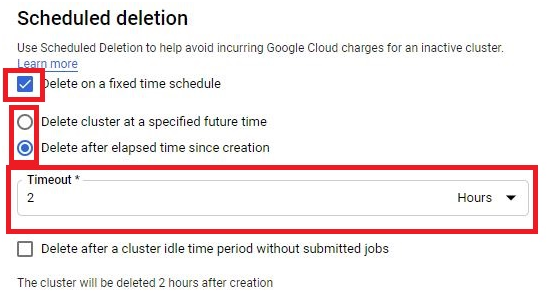

Under Scheduled deletion, you can have the cluster deleted automatically after a timeout.

Specify the timeout.

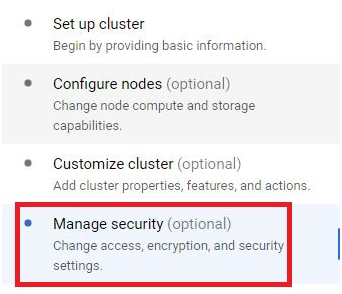

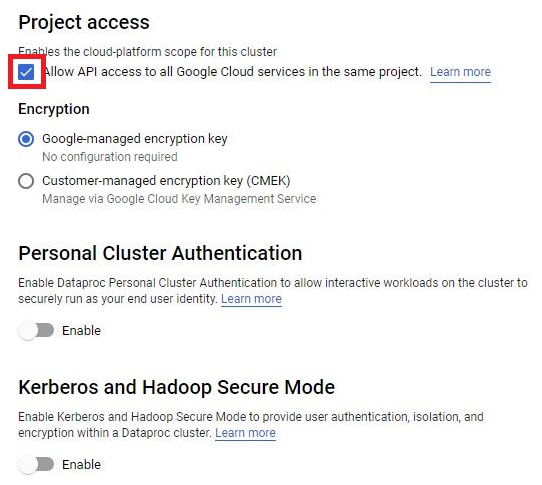

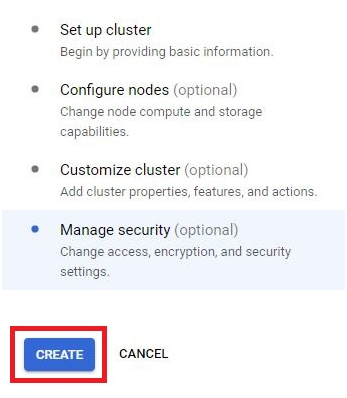
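The network and scheduled deletion settings can be sketched as flags as well. The durations `30m` and `1d` are example values, and the flag names `--max-idle` and `--max-age` are what I would expect from the gcloud CLI; check `gcloud dataproc clusters create --help` for your installed version before relying on them.

```python
def network_and_deletion_flags(network="default", max_idle=None, max_age=None):
    """Build flags for the cluster network and scheduled deletion."""
    flags = [f"--network={network}"]
    if max_idle:
        # Delete the cluster after it has been idle for this long.
        flags.append(f"--max-idle={max_idle}")
    if max_age:
        # Delete the cluster once it reaches this age, idle or not.
        flags.append(f"--max-age={max_age}")
    return flags


# Example durations (assumptions).
print(" ".join(network_and_deletion_flags(max_idle="30m", max_age="1d")))
```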

Select Manage security.

Tick Allow API access.

Click Create.

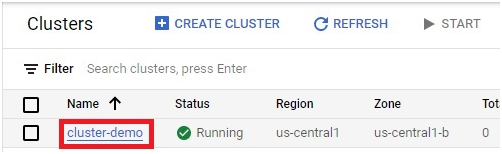
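On the command line, API access is controlled through access scopes. The sketch below assumes that the console checkbox corresponds to the broad `cloud-platform` scope, which is an assumption on my part rather than something the screenshots confirm. The cluster name and region are placeholders.

```python
import shlex


def create_with_api_access(name, region, extra_flags=()):
    """Assemble a create command that grants the cluster VMs the cloud-platform scope."""
    cmd = ["gcloud", "dataproc", "clusters", "create", name, f"--region={region}"]
    cmd.extend(extra_flags)
    # Assumed equivalent of ticking "Allow API access" in the console.
    cmd.append("--scopes=cloud-platform")
    return cmd


# Placeholder name and region (assumptions).
cmd = create_with_api_access("demo-cluster", "us-central1", ["--single-node"])
print(shlex.join(cmd))
```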

Creation takes a little while. Once it finishes, the cluster appears in the list.

Click the cluster name.

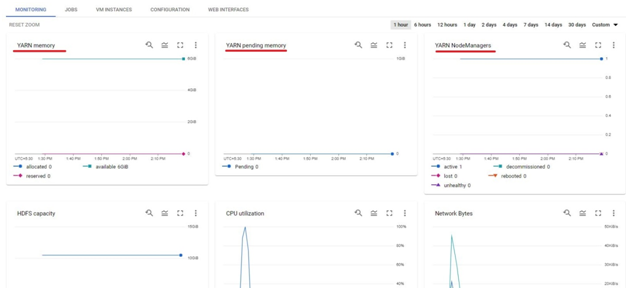

You can see the YARN monitoring of the cluster.
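If you prefer to check on the cluster from a script, one option is to describe it with gcloud and read its state from the JSON output. The sketch below builds the describe command and parses a hand-written sample response; the sample JSON is illustrative, not real output, and the cluster name and region are placeholders.

```python
import json
import shlex


def describe_command(name, region):
    """Build the gcloud command that prints a cluster's details as JSON."""
    return ["gcloud", "dataproc", "clusters", "describe", name,
            f"--region={region}", "--format=json"]


def cluster_state(describe_json):
    """Extract the cluster state (for example RUNNING) from describe output."""
    cluster = json.loads(describe_json)
    return cluster.get("status", {}).get("state", "UNKNOWN")


# Hand-written sample, trimmed to the fields used here (assumption).
sample = '{"clusterName": "demo-cluster", "status": {"state": "RUNNING"}}'
print(shlex.join(describe_command("demo-cluster", "us-central1")))
print(cluster_state(sample))
```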
