Benefits and limitations of Tensor Processing Units (TPUs)

One significant benefit of TPUs is their speed and efficiency on large-scale machine learning tasks. TPUs are built for the matrix multiplications and other tensor operations that dominate neural network computation, so on these workloads they can deliver faster training times and lower power consumption than CPUs and, in many cases, GPUs.

Moreover, TPUs scale horizontally: organizations can connect multiple TPUs in a distributed configuration to train larger models and process larger datasets than a single device could handle. This makes TPUs well suited to complex AI workloads.
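To make the idea of horizontal scaling concrete, here is a minimal sketch of data parallelism, one common way to spread training across several accelerators. It is plain Python with no TPU involved. The function names (`shard_batch`, `replica_gradient`, `data_parallel_gradient`) and the toy model `y = w * x` are assumptions made for illustration, not part of any TPU API.

```python
def shard_batch(batch, num_replicas):
    """Split a global batch into equal, contiguous per-replica shards."""
    if len(batch) % num_replicas != 0:
        raise ValueError("global batch size must be divisible by num_replicas")
    per_replica = len(batch) // num_replicas
    return [batch[i * per_replica:(i + 1) * per_replica]
            for i in range(num_replicas)]


def replica_gradient(shard, w):
    """Gradient of the mean squared error of y = w * x over one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def data_parallel_gradient(batch, w, num_replicas):
    """Each (simulated) replica computes a gradient on its own shard,
    then the per-replica gradients are averaged."""
    shards = shard_batch(batch, num_replicas)
    grads = [replica_gradient(shard, w) for shard in shards]
    return sum(grads) / num_replicas


# Toy data: (x, y) pairs that follow y = 2 * x.
batch = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
single_device = replica_gradient(batch, w=1.0)
two_replicas = data_parallel_gradient(batch, w=1.0, num_replicas=2)
print(single_device, two_replicas)
```

Because the shards are equal in size, averaging the per-replica gradients gives the same result as computing the gradient over the whole batch on one device. On real hardware the shards would be processed at the same time on separate devices rather than in a loop.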

However, TPUs also have limitations. Their specialized design is optimized primarily for deep learning, so while they excel at dense tensor computations, they may not offer the versatility of CPUs or GPUs for general-purpose computing tasks.

Prerequisites

Hardware: GCP

Google account

TPUs are Google’s custom-developed application-specific integrated circuits (ASICs), used to accelerate machine learning workloads. Cloud TPU enables you to run your machine learning workloads on Google’s TPU accelerator hardware using TensorFlow.

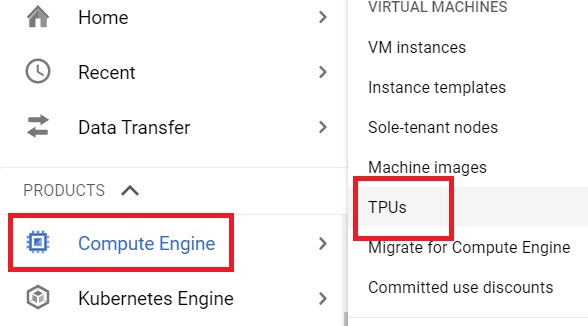

Open Menu > Compute Engine > TPUs

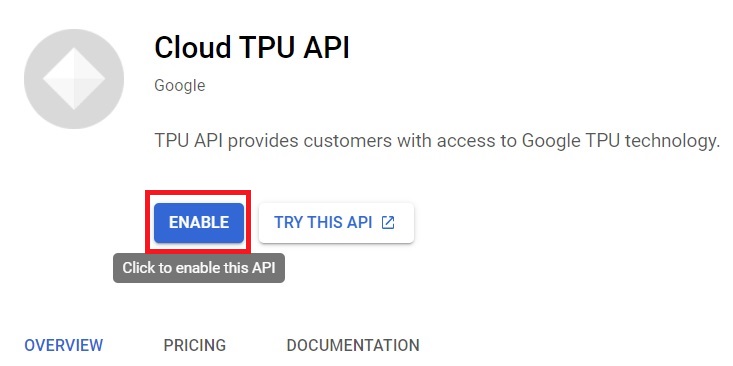

If the TPU API is not enabled, click Enable.

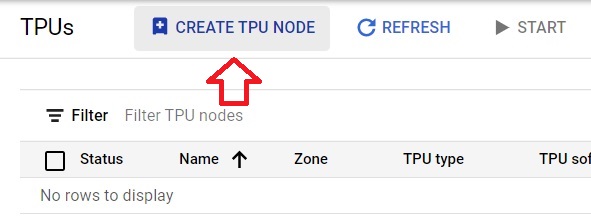

Click Create TPU node.

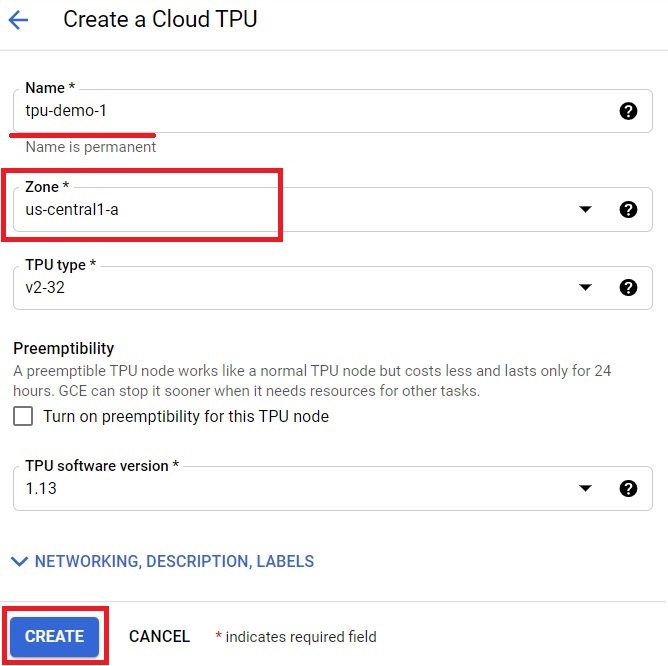

Give the TPU a name, then select the zone and TPU type. Choose the TPU software version that suits your workload, then click Create.
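The same four settings from the console form (name, zone, TPU type, software version) can also be expressed as a command line. The sketch below only builds and prints the command; it does not run it. It assumes the gcloud CLI is installed and that your SDK release provides `gcloud compute tpus create` with the `--zone`, `--accelerator-type`, and `--version` flags. The helper `build_tpu_create_command` and all values passed to it are hypothetical placeholders, so substitute the zone, type, and version offered in your own project.

```python
import shlex


def build_tpu_create_command(name, zone, accelerator_type, version):
    """Assemble a gcloud argument list mirroring the console form fields.

    Assumption: the `gcloud compute tpus create` command and these flags
    exist in the installed SDK; check `gcloud compute tpus create --help`.
    """
    return [
        "gcloud", "compute", "tpus", "create", name,
        f"--zone={zone}",
        f"--accelerator-type={accelerator_type}",
        f"--version={version}",
    ]


# Placeholder values for illustration only.
cmd = build_tpu_create_command(
    name="demo-tpu",
    zone="us-central1-b",
    accelerator_type="v2-8",
    version="2.12.0",
)
print(shlex.join(cmd))
```

Keeping the command as a list of arguments means it can later be passed to `subprocess.run(cmd, check=True)` without shell quoting problems, once you have confirmed that the values are valid for your project.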
