Apache Spark SQL Commands

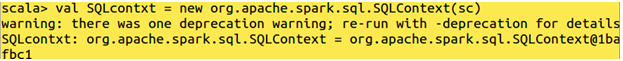

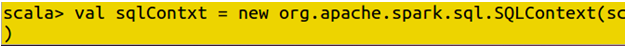

Welcome to the basics of Apache Spark SQL commands. If you are looking for a list of the standard Spark SQL commands with examples, you have landed in the right place. We at Prwatech have listed the top Apache Spark SQL commands that every Spark developer should know. If you want to go further, an advanced certification course from an Apache Spark training institute in Bangalore can guide you from beginner level to advanced level.

Spark context (sc): Initializes the functionality of Spark SQL. To create a Spark context:

Check the context that was created:
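As a rough sketch of these two steps, the snippet below builds a local session and inspects its context. The application name and the `local[*]` master are assumptions for illustration; in `spark-shell` the `spark` and `sc` values already exist and do not need to be created.

```scala
import org.apache.spark.sql.SparkSession

// Assumption: a local session for experimentation.
val spark = SparkSession.builder()
  .appName("spark-sql-commands") // hypothetical application name
  .master("local[*]")            // assumption: run locally on all cores
  .getOrCreate()

// The Spark context is available from the session.
val sc = spark.sparkContext

// Check the context that was created.
println(sc.appName)
println(sc.master)
```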

Data Frame

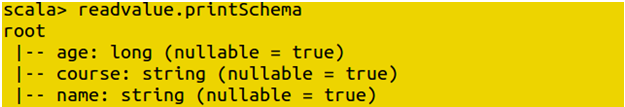

Schema of a table: The command below prints the schema of the data set.

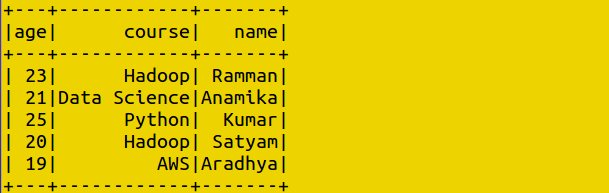

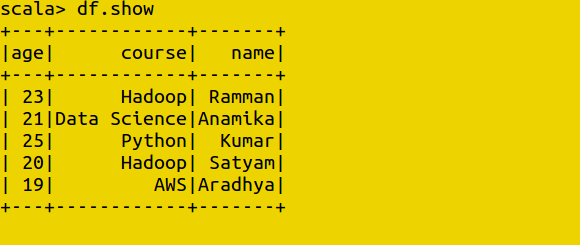
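Since the commands above appear only as screenshots, here is a minimal sketch of building a DataFrame and printing its schema. The sample rows and column names are hypothetical and are not taken from the original data set.

```scala
import org.apache.spark.sql.SparkSession

// Assumption: a local session; in spark-shell, `spark` already exists.
val spark = SparkSession.builder().appName("schema-demo").master("local[*]").getOrCreate()
import spark.implicits._

// Hypothetical sample data.
val df = Seq(
  (1, "Asha", "Bangalore", 30),
  (2, "Ravi", "Mysore", 25),
  (3, "Meena", "Bangalore", 41)
).toDF("id", "name", "city", "age")

// Print the column names and types as a tree.
df.printSchema()
```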

Show data: The command below displays the values in the data set.

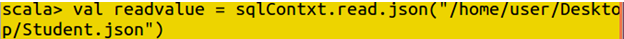

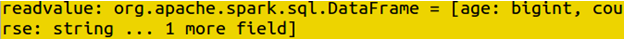

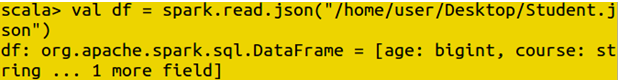

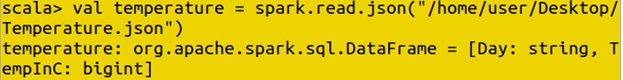
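Displaying data could look roughly like the sketch below. The sample rows are hypothetical; `show()` prints the first 20 rows by default, and the second form limits the row count and turns off truncation of long values.

```scala
import org.apache.spark.sql.SparkSession

// Assumption: a local session; in spark-shell, `spark` already exists.
val spark = SparkSession.builder().appName("show-demo").master("local[*]").getOrCreate()
import spark.implicits._

// Hypothetical sample data.
val df = Seq(
  (1, "Asha", "Bangalore", 30),
  (2, "Ravi", "Mysore", 25),
  (3, "Meena", "Bangalore", 41)
).toDF("id", "name", "city", "age")

// Display the rows as a text table.
df.show()

// Display only the first 2 rows, without truncating long values.
df.show(2, false)
```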

Reading files using Spark session: The command below reads data from an external source, given the path where it is stored.

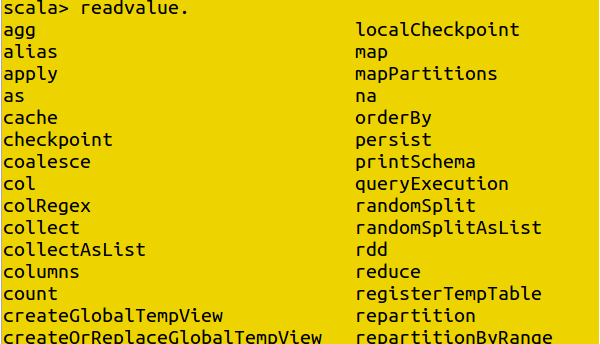

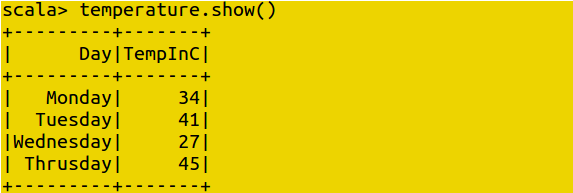

Show value: Displays the data that was read.

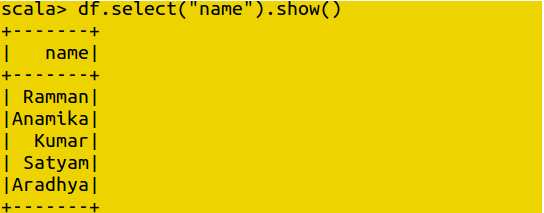
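The read-then-display steps above can be sketched as follows. To keep the example self-contained, it first writes a small temporary CSV file; the file contents and the reader options are assumptions, not the original source data.

```scala
import java.nio.file.Files
import org.apache.spark.sql.SparkSession

// Assumption: a local session; in spark-shell, `spark` already exists.
val spark = SparkSession.builder().appName("read-demo").master("local[*]").getOrCreate()

// Create a small hypothetical CSV file to read back.
val path = Files.createTempFile("people", ".csv")
Files.write(path, "id,name,age\n1,Asha,30\n2,Ravi,25\n".getBytes("UTF-8"))

// Read the file, treating the first line as a header and inferring column types.
val people = spark.read
  .option("header", "true")
  .option("inferSchema", "true")
  .csv(path.toString)

// Display the data that was read.
people.show()
```

The same `spark.read` entry point also offers `json`, `parquet` and `text` readers for other file formats.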

Selecting a particular column: The command below displays all the data from a single column.

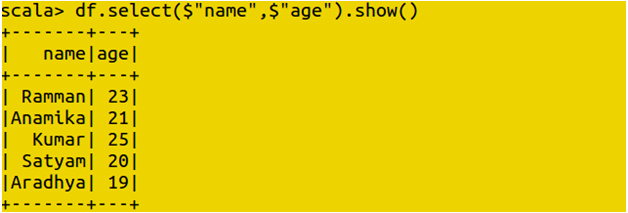

Selecting more than one column: The command below displays all the data from two selected columns.

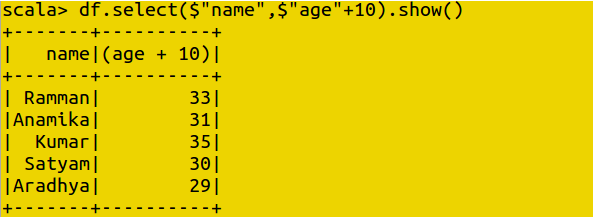
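A minimal sketch of both kinds of selection, using hypothetical sample data and column names:

```scala
import org.apache.spark.sql.SparkSession

// Assumption: a local session; in spark-shell, `spark` already exists.
val spark = SparkSession.builder().appName("select-demo").master("local[*]").getOrCreate()
import spark.implicits._

// Hypothetical sample data.
val df = Seq(
  (1, "Asha", "Bangalore", 30),
  (2, "Ravi", "Mysore", 25),
  (3, "Meena", "Bangalore", 41)
).toDF("id", "name", "city", "age")

// Select a single column.
val names = df.select("name")
names.show()

// Select two columns.
val nameAndAge = df.select("name", "age")
nameAndAge.show()
```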

Incrementing the value of a column: The command below adds a given value to every entry in a column.

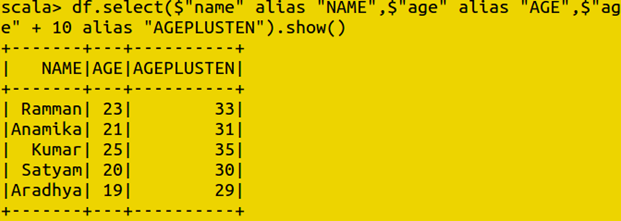

Alias: The command below displays columns under different names.

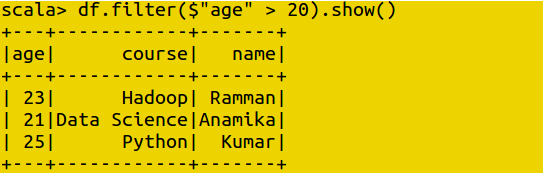
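Incrementing and aliasing might look like the sketch below. The data and the alias names (`employee_name`, `age_next_year`) are hypothetical, chosen only for illustration.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.col

// Assumption: a local session; in spark-shell, `spark` already exists.
val spark = SparkSession.builder().appName("alias-demo").master("local[*]").getOrCreate()
import spark.implicits._

// Hypothetical sample data.
val df = Seq(("Asha", 30), ("Ravi", 25), ("Meena", 41)).toDF("name", "age")

// Add 1 to every value in the age column.
val incremented = df.select(col("name"), col("age") + 1)
incremented.show()

// Do the same, but display both columns under different names.
val renamed = df.select(
  col("name").alias("employee_name"),
  (col("age") + 1).alias("age_next_year")
)
renamed.show()
```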

Filter: The command below filters rows using different conditions.

DataFrames are immutable: a transformation such as a filter returns a new DataFrame and leaves the original unchanged.


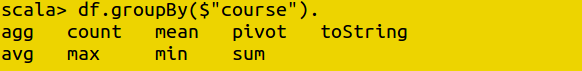

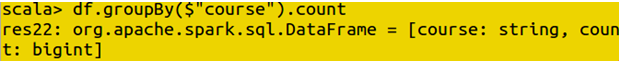

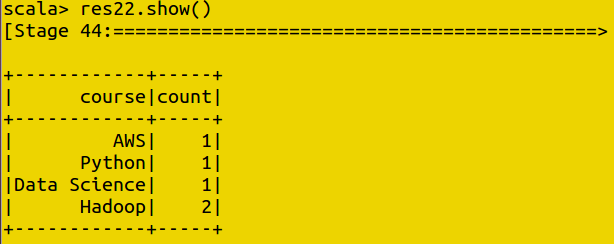

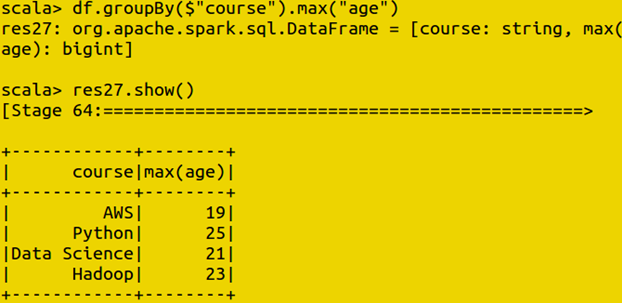

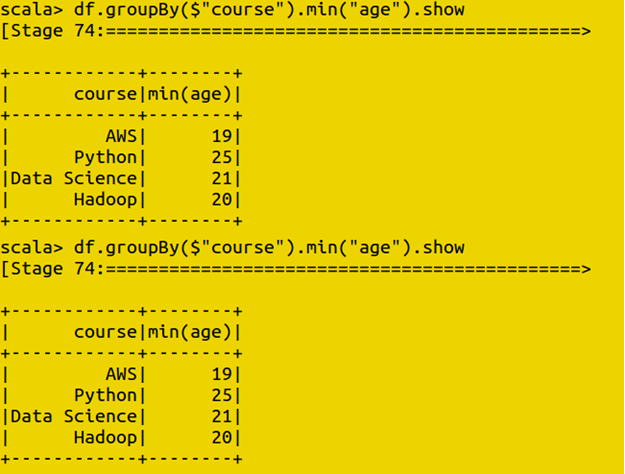
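The sketch below shows a few ways to filter, on hypothetical sample data. It also illustrates immutability: each filter produces a new DataFrame, and the original keeps all of its rows.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.col

// Assumption: a local session; in spark-shell, `spark` already exists.
val spark = SparkSession.builder().appName("filter-demo").master("local[*]").getOrCreate()
import spark.implicits._

// Hypothetical sample data.
val df = Seq(
  (1, "Asha", "Bangalore", 30),
  (2, "Ravi", "Mysore", 25),
  (3, "Meena", "Bangalore", 41)
).toDF("id", "name", "city", "age")

// Filter with a column expression.
val olderThan26 = df.filter(col("age") > 26)

// Filter with a SQL-style condition string.
val inBangalore = df.filter("city = 'Bangalore'")

// Combine two conditions.
val combined = df.filter(col("age") > 26 && col("city") === "Bangalore")

combined.show()

// The original DataFrame still has every row.
println(df.count())
```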

Group by

Various Functions in Group By

Count: This group-by function counts the rows in each group of the data set.

Max: This group-by function finds the maximum value in each group of the data set.

Min: This group-by function finds the minimum value in each group of the data set.

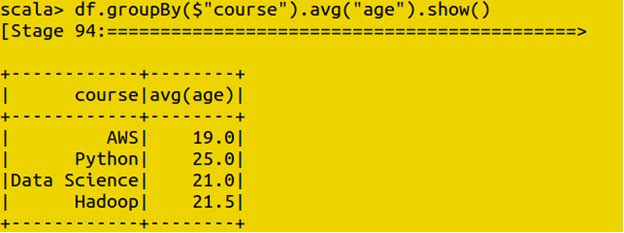

Average: This group-by function finds the average value in each group of the data set.

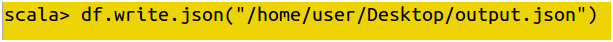

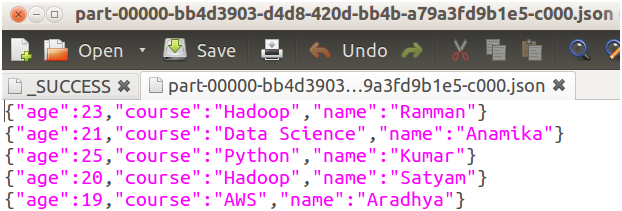
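The four group-by functions above can be sketched as follows. The `sales` data and its column names are hypothetical. The last statement computes all four aggregates in one pass with `agg`, which is one possible way to combine them.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.{avg, col, count, max, min}

// Assumption: a local session; in spark-shell, `spark` already exists.
val spark = SparkSession.builder().appName("groupby-demo").master("local[*]").getOrCreate()
import spark.implicits._

// Hypothetical sample data.
val sales = Seq(("Bangalore", 100), ("Bangalore", 300), ("Mysore", 50)).toDF("city", "amount")

// One aggregate at a time.
sales.groupBy("city").count().show()
sales.groupBy("city").max("amount").show()
sales.groupBy("city").min("amount").show()
sales.groupBy("city").avg("amount").show()

// All four aggregates together.
val summary = sales.groupBy("city").agg(
  count("*").alias("n"),
  max("amount").alias("max_amount"),
  min("amount").alias("min_amount"),
  avg("amount").alias("avg_amount")
)
summary.show()
```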

Write: The command below writes the data to a location chosen by the user.

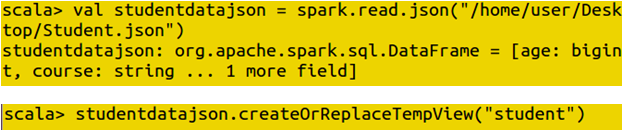

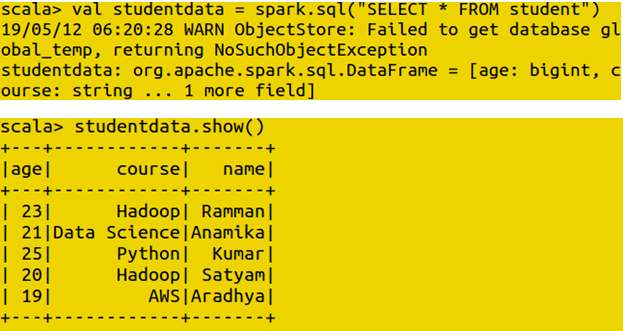

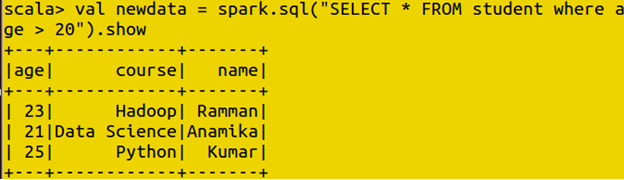

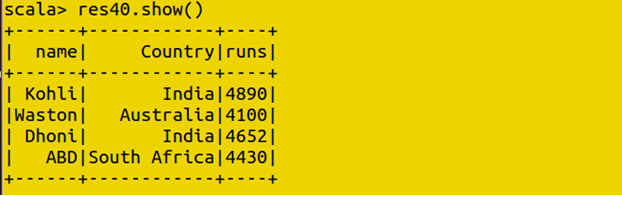

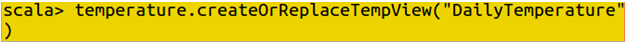
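A minimal sketch of writing a DataFrame and reading it back to confirm the result. The output directory is a temporary one created for the example, and Parquet is an assumed format; the original screenshots may use a different format or location.

```scala
import java.nio.file.Files
import org.apache.spark.sql.SparkSession

// Assumption: a local session; in spark-shell, `spark` already exists.
val spark = SparkSession.builder().appName("write-demo").master("local[*]").getOrCreate()
import spark.implicits._

// Hypothetical sample data.
val df = Seq(("Asha", 30), ("Ravi", 25), ("Meena", 41)).toDF("name", "age")

// Hypothetical output location inside a fresh temporary directory.
val outDir = Files.createTempDirectory("spark-out").resolve("people_parquet").toString

// Write the data, replacing anything already at that location.
df.write.mode("overwrite").parquet(outDir)

// Read it back to confirm what was stored.
val readBack = spark.read.parquet(outDir)
readBack.show()
```

Other save modes include `append`, `ignore` and `errorifexists` (the default), and the writer also supports `csv` and `json` output.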

To run queries, we first create a temporary view.

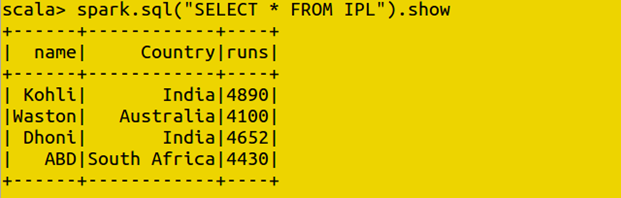

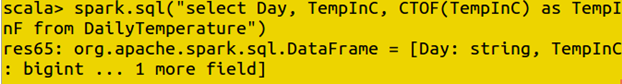

Now we can perform operations using SQL queries.


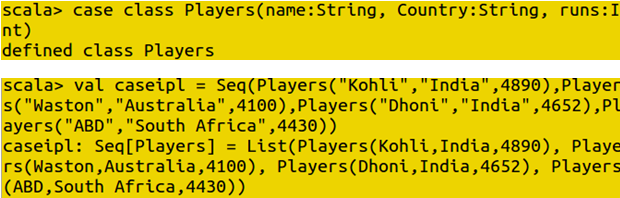
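Creating a temporary view and querying it with SQL could look like the sketch below. The view name `people` and the sample rows are hypothetical.

```scala
import org.apache.spark.sql.SparkSession

// Assumption: a local session; in spark-shell, `spark` already exists.
val spark = SparkSession.builder().appName("tempview-demo").master("local[*]").getOrCreate()
import spark.implicits._

// Hypothetical sample data.
val df = Seq(("Asha", 30), ("Ravi", 25), ("Meena", 41)).toDF("name", "age")

// Register the DataFrame as a temporary view for this session.
df.createOrReplaceTempView("people")

// Query the view with plain SQL; the result is again a DataFrame.
val result = spark.sql("SELECT name, age FROM people WHERE age > 26 ORDER BY age")
result.show()
```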

Data Set

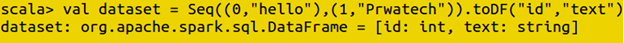

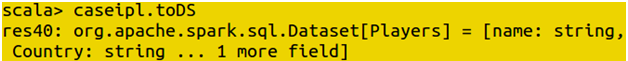

Create dataset: In this walkthrough the Dataset is created from a Seq object.

Dataset with metadata info

Now we can run SQL queries on it.

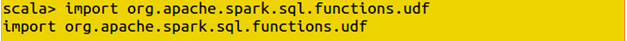
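As a sketch of these steps, the snippet below creates a typed Dataset from a Seq of case-class instances and then queries it with SQL. The `Person` case class, the sample values and the view name `persons` are all hypothetical.

```scala
import org.apache.spark.sql.SparkSession

// Assumption: a local session; in spark-shell, `spark` already exists.
val spark = SparkSession.builder().appName("dataset-demo").master("local[*]").getOrCreate()
import spark.implicits._

// Hypothetical record type; its fields become the Dataset's columns.
case class Person(name: String, age: Int)

// Create a Dataset from a Seq object.
val ds = Seq(Person("Asha", 30), Person("Ravi", 25)).toDS()

// The schema (metadata) comes from the case class.
ds.printSchema()

// Typed operations work on Person objects directly.
val older = ds.filter((p: Person) => p.age > 26)
older.show()

// A Dataset can also be queried with SQL through a temporary view.
ds.createOrReplaceTempView("persons")
val olderNames = spark.sql("SELECT name FROM persons WHERE age > 26")
olderNames.show()
```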

UDF Functions

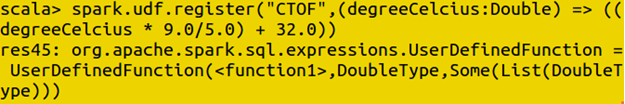

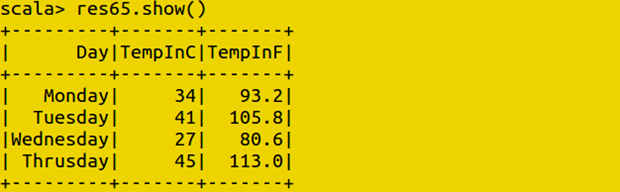

A UDF (user-defined function) lets us register custom functions to call within SQL. UDFs are a very popular way to expose advanced functionality to SQL users in an organization, so that these users can call it without writing code.

Test case 1: Converting Celsius into Fahrenheit

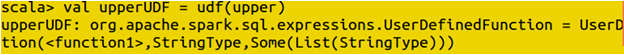

Register UDF: The command below registers a new function to suit the user's needs.

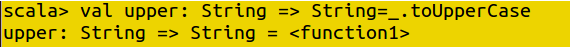

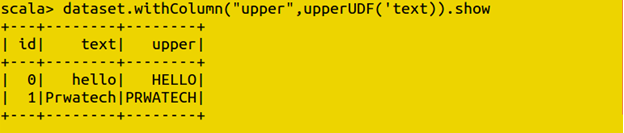
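Test case 1 can be sketched as below: a plain Scala function is registered under a SQL name and then called from a query. The function name `c_to_f`, the view name and the sample temperatures are hypothetical.

```scala
import org.apache.spark.sql.SparkSession

// Assumption: a local session; in spark-shell, `spark` already exists.
val spark = SparkSession.builder().appName("udf-temp-demo").master("local[*]").getOrCreate()
import spark.implicits._

// Ordinary Scala function: Fahrenheit = Celsius * 9 / 5 + 32.
val celsiusToFahrenheit = (c: Double) => c * 9.0 / 5.0 + 32.0

// Register it so that SQL queries can call it by name.
spark.udf.register("c_to_f", celsiusToFahrenheit)

// Hypothetical sample data.
val temps = Seq(("Bangalore", 25.0), ("Delhi", 40.0)).toDF("city", "celsius")
temps.createOrReplaceTempView("temps")

// Call the registered function inside SQL.
val converted = spark.sql("SELECT city, c_to_f(celsius) AS fahrenheit FROM temps")
converted.show()
```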

Test case 2: Converting lowercase to uppercase
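A minimal sketch of this second test case follows the same pattern. The function name `to_upper` and the sample names are hypothetical. Spark also ships a built-in `upper` function, so a UDF here serves only as an illustration.

```scala
import org.apache.spark.sql.SparkSession

// Assumption: a local session; in spark-shell, `spark` already exists.
val spark = SparkSession.builder().appName("udf-upper-demo").master("local[*]").getOrCreate()
import spark.implicits._

// Ordinary Scala function; null input is passed through unchanged.
val toUpper = (s: String) => if (s == null) null else s.toUpperCase

// Register it so that SQL queries can call it by name.
spark.udf.register("to_upper", toUpper)

// Hypothetical sample data.
val names = Seq("asha", "ravi").toDF("name")
names.createOrReplaceTempView("names")

// Call the registered function inside SQL.
val upperNames = spark.sql("SELECT to_upper(name) AS name_upper FROM names ORDER BY name")
upperNames.show()
```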