What is Hadoop 3.0?

Welcome to this Hadoop 3.0 tutorial. It explains what Hadoop 3.0 is, what is new in this release, and which of its new features Hadoop developers use most. If you want to become a certified Hadoop developer, you can take a Hadoop certification course from India’s leading Hadoop training institute.

Follow the Hadoop 3.0 tutorial below from Prwatech and learn Hadoop from professionals with more than 15 years of hands-on experience.

What is Hadoop 3.0?

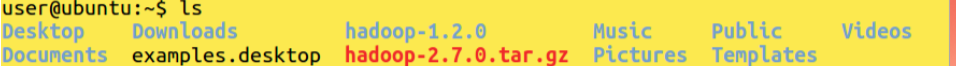

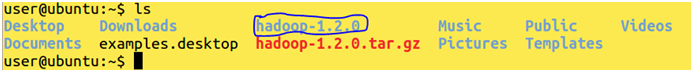

The Apache Hadoop community has released a new major version, Hadoop 3.0. It was published through a series of alpha and beta releases so that downstream applications and end users could test the platform and provide feedback, which was then folded back into the alpha and beta process. The new release includes thousands of fixes and improvements compared with the previous minor release, 2.7.0, which came out over a year earlier. This blog gives you an overview of the new release and its features.

The new Hadoop version incorporates a number of significant enhancements that benefit Hadoop users. The Apache site has full details of these changes, and you can refer to it for a closer look. This post gives an overview of the changes, which are listed below.

New Features of Hadoop 3.0

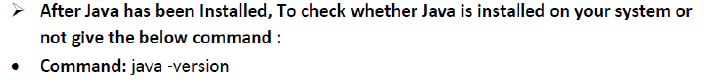

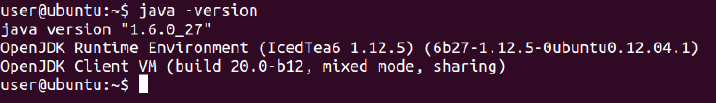

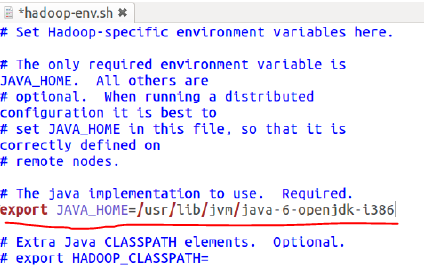

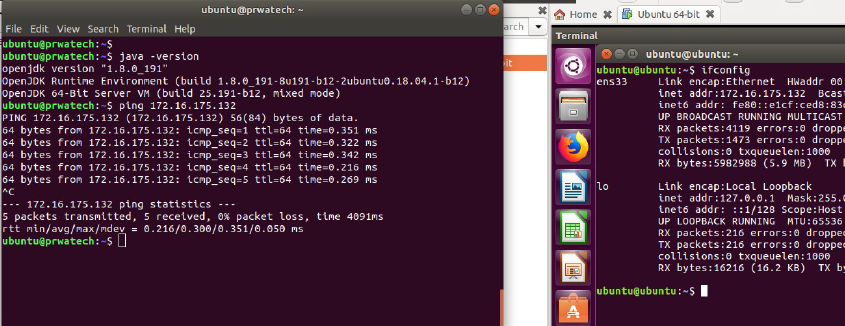

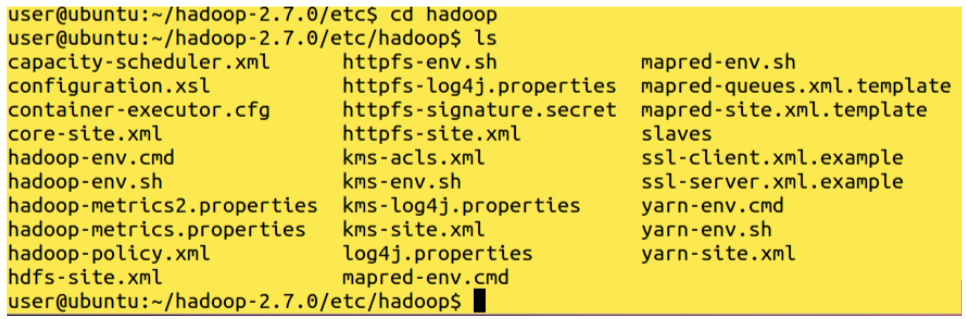

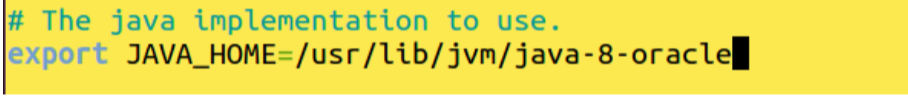

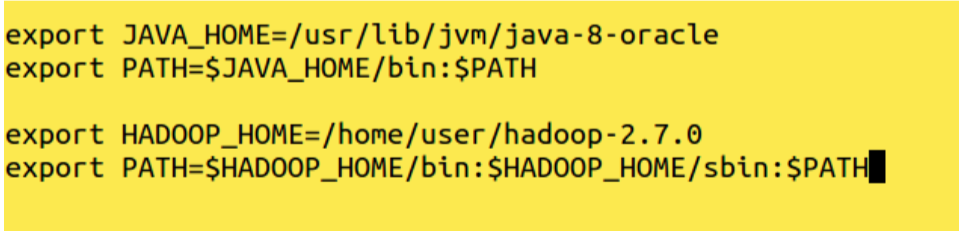

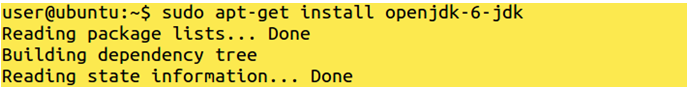

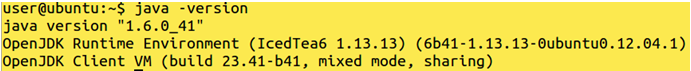

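Requires Java 8:- Hadoop 3.0 JARs are compiled for a Java 8 runtime, so users still on Java 7 or below must upgrade.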

Supports HDFS erasure coding.

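Supports more than 2 NameNodes:- Earlier high-availability setups had a single active NameNode and a single standby NameNode, an architecture that can tolerate the failure of any one node in the system. Hadoop 3.0 allows multiple standby NameNodes. For example, three NameNodes with five JournalNodes can tolerate the failure of two nodes rather than just one.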
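The fault-tolerance arithmetic behind this feature can be sketched in a few lines of Python. This is only an illustration under two assumptions: one surviving NameNode is enough to serve the namespace, and the JournalNodes need a majority (quorum) alive to accept edits. The function names are made up for this example and are not part of any Hadoop API.

```python
def tolerated_namenode_failures(namenodes: int) -> int:
    """All but one NameNode may fail (assumes one survivor can take over)."""
    if namenodes < 1:
        raise ValueError("need at least one NameNode")
    return namenodes - 1


def tolerated_journalnode_failures(journalnodes: int) -> int:
    """A majority of JournalNodes must stay alive, so a minority may fail."""
    if journalnodes < 1:
        raise ValueError("need at least one JournalNode")
    return (journalnodes - 1) // 2


def tolerated_failures(namenodes: int, journalnodes: int) -> int:
    """Node failures the HA setup survives: the weaker of the two limits."""
    return min(
        tolerated_namenode_failures(namenodes),
        tolerated_journalnode_failures(journalnodes),
    )


hadoop2_style = tolerated_failures(namenodes=2, journalnodes=3)
hadoop3_style = tolerated_failures(namenodes=3, journalnodes=5)
print(hadoop2_style, hadoop3_style)
```

With two NameNodes and three JournalNodes the result is one tolerated failure; with three NameNodes and five JournalNodes it is two.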

Support for Microsoft Azure Data Lake:- Hadoop now supports integration with Microsoft Azure Data Lake.

Scalability:- YARN Timeline Service v.2 uses Apache HBase as its primary backing storage, because HBase scales to large sizes while keeping good response times for reads and writes.

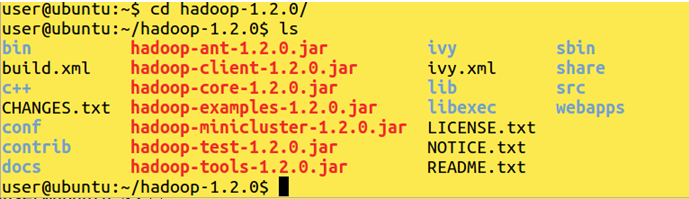

Shaded client jars:- The new hadoop-client-api and hadoop-client-runtime artifacts shade Hadoop’s dependencies into a single jar. This avoids leaking Hadoop’s dependencies onto the application’s classpath.

MapReduce task-level native optimization:- MapReduce has added support for a native implementation of the map output collector. For shuffle-intensive jobs, this can lead to a performance improvement of 30% or more.

Support for Erasure Coding in HDFS:- Given the rapid growth in data volumes and datacentre hardware, erasure coding support in Hadoop 3.0 will be an important feature in the years to come. Erasure coding is a roughly 50-year-old technique that lets a lost piece of data be rebuilt from the remaining pieces together with parity data stored alongside them. It works much like an advanced RAID scheme that recovers data automatically when a hard disk fails, and it stores data durably with far less space overhead than replication.
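A small Python sketch can make the recovery idea concrete. It uses a single XOR parity block, the simplest erasure code, which can rebuild only one lost block; HDFS policies are typically based on Reed-Solomon codes, which generalize the same idea to several parity blocks. The block contents and function names here are invented for illustration.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, one byte position at a time."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def storage_overhead(data_units: int, extra_units: int) -> float:
    """Extra storage needed per unit of data (0.5 means 50% overhead)."""
    return extra_units / data_units


# Three data blocks plus one parity block computed from them.
data = [b"hado", b"op 3", b".0!!"]
parity = xor_blocks(data)

# Pretend the second block was on a failed disk: rebuild it by XOR-ing
# the surviving data blocks with the parity block.
survivors = [data[0], data[2], parity]
recovered = xor_blocks(survivors)
print(recovered == data[1])

# Overhead comparison: 6 data + 3 parity units versus 3x replication
# (1 original + 2 extra copies).
rs_6_3 = storage_overhead(6, 3)
replication_3x = storage_overhead(1, 2)
print(rs_6_3, replication_3x)
```

The overhead figures show why erasure coding matters for large clusters: a 6 data plus 3 parity layout adds 50% extra storage, while three-way replication adds 200%.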

Support for Opportunistic Containers and Distributed Scheduling:- A notion of ExecutionType has been introduced, whereby applications can now request containers with an execution type of Opportunistic. Containers of this type can be dispatched for execution at a NodeManager (NM) even if there are no resources available at the moment of scheduling. In that case they are queued at the NM until resources become available.

Opportunistic containers are by default allocated by the central ResourceManager (RM), but support has also been added to allow opportunistic containers to be allocated by a distributed scheduler, which is implemented as an AMRMProtocol interceptor.
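The difference between the two execution types can be illustrated with a toy model in Python. This is not YARN code and does not use any YARN API: `ToyNodeManager`, its methods and the integer "capacity" are all hypothetical, and the model assumes that an opportunistic container sent to a busy node waits in a queue there, while a guaranteed container is simply not placed on a node without free resources.

```python
from collections import deque
from dataclasses import dataclass, field

GUARANTEED = "GUARANTEED"
OPPORTUNISTIC = "OPPORTUNISTIC"


@dataclass
class ToyNodeManager:
    """Hypothetical stand-in for a NodeManager with a fixed resource capacity."""
    capacity: int
    running: list = field(default_factory=list)   # (name, size, execution_type)
    queued: deque = field(default_factory=deque)  # waiting opportunistic containers

    def used(self) -> int:
        return sum(size for _, size, _ in self.running)

    def dispatch(self, name: str, size: int, execution_type: str) -> str:
        """Return 'RUNNING', 'QUEUED' or 'REJECTED' for the container."""
        if self.used() + size <= self.capacity:
            self.running.append((name, size, execution_type))
            return "RUNNING"
        if execution_type == OPPORTUNISTIC:
            # No free resources right now: wait at the node.
            self.queued.append((name, size, execution_type))
            return "QUEUED"
        return "REJECTED"

    def finish(self, name: str) -> None:
        """Free a container's resources, then start queued ones that fit (FIFO)."""
        self.running = [c for c in self.running if c[0] != name]
        while self.queued and self.used() + self.queued[0][1] <= self.capacity:
            self.running.append(self.queued.popleft())


nm = ToyNodeManager(capacity=4)
first = nm.dispatch("g1", 4, GUARANTEED)      # fills the node
second = nm.dispatch("o1", 2, OPPORTUNISTIC)  # node is full, so it waits
third = nm.dispatch("g2", 2, GUARANTEED)      # node is full, not placed here
nm.finish("g1")                               # frees resources; o1 starts
now_running = [name for name, _, _ in nm.running]
print(first, second, third, now_running)
```

The model leaves out preemption and the distributed scheduler itself; it only shows why dispatching to a busy node can still be useful for keeping that node fully utilised once resources free up.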

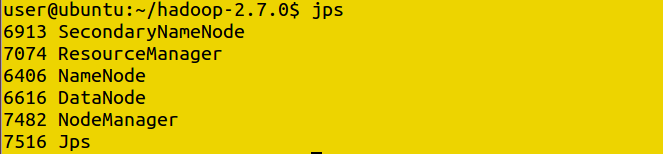

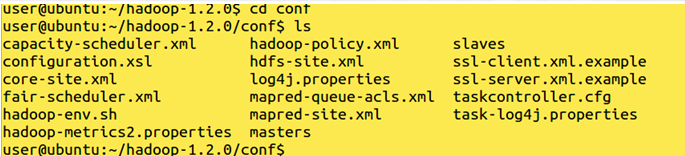

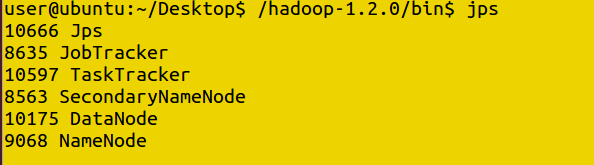

Default port number changes

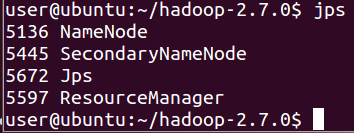

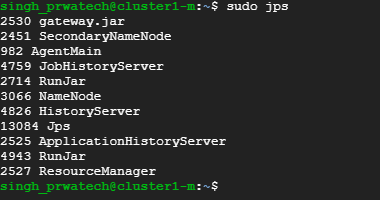

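NameNode web UI:- 9870 (changed from 50070 in Hadoop 2)

ResourceManager web UI:- 8088 (unchanged from Hadoop 2)

MapReduce JobHistory server web UI:- 19888 (unchanged from Hadoop 2)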
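As a quick reference, the ports listed above can be kept in a small Python lookup that builds the web UI address for each daemon. This is a sketch that assumes the defaults have not been overridden in the cluster's site configuration and that plain HTTP is in use; the host name `master.example.com` and the helper `web_ui_url` are placeholders invented for this example.

```python
# Default web UI ports taken from the list above.
DEFAULT_WEB_UI_PORTS = {
    "namenode": 9870,
    "resourcemanager": 8088,
    "jobhistory": 19888,
}


def web_ui_url(daemon: str, host: str) -> str:
    """Build the default web UI URL for a daemon on the given host."""
    try:
        port = DEFAULT_WEB_UI_PORTS[daemon]
    except KeyError:
        raise ValueError(f"unknown daemon: {daemon!r}") from None
    return f"http://{host}:{port}/"


for daemon in DEFAULT_WEB_UI_PORTS:
    print(web_ui_url(daemon, "master.example.com"))
```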

In this Hadoop 3.0 tutorial, we have covered what Hadoop 3.0 is, what is new in this version, and what its new features are. To take your interest in Hadoop to the next level, you can become a certified Hadoop expert by enrolling with Prwatech E-learning, India’s leading advanced Hadoop training institute in Bangalore. Register now for more updates on Hadoop 3.0. Our expert trainers will help you master real-world skills in these Hadoop technologies.