Analyze massive datasets with BigQuery

Open the Google Cloud console.

Open Navigation menu > BigQuery > SQL workspace.

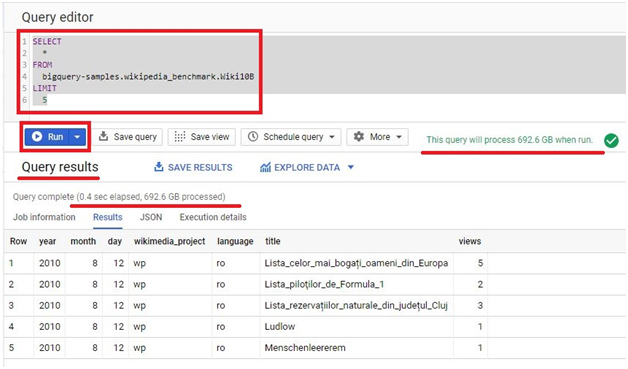

In the query editor, paste the following query:

```sql
SELECT
  *
FROM
  `bigquery-samples.wikipedia_benchmark.Wiki10B`
LIMIT
  5
```

Then click Run.

The results appear within seconds. In this run, the query processed 692 GB in less than a second.

Note: This is a public dataset provided by Google. Processing times vary from run to run, so your numbers may differ.

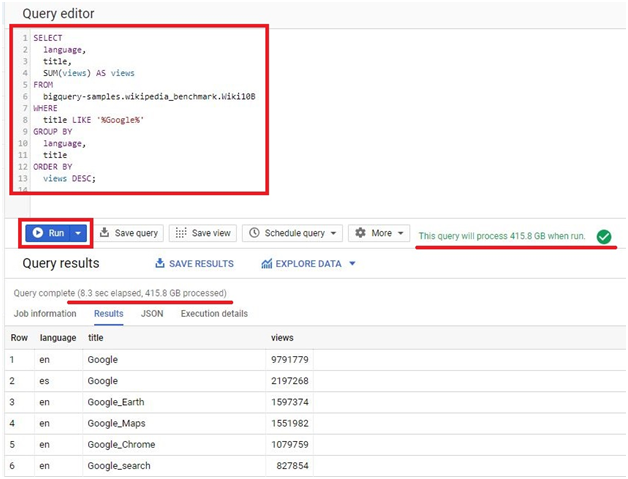
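To check the bytes processed and elapsed time of your own runs, you can query your job history. This is a minimal sketch that assumes your jobs ran in the US multi-region and that you can read `INFORMATION_SCHEMA.JOBS_BY_USER`; change the `region-us` qualifier if your jobs ran elsewhere. Units may differ slightly from what the console displays.

```sql
-- List your most recent query jobs with bytes processed and elapsed time.
-- Assumption: the jobs ran in the US multi-region; change `region-us` if not.
SELECT
  job_id,
  cache_hit,  -- TRUE when the results were served from the query cache
  total_bytes_processed / POW(1024, 3) AS gib_processed,
  TIMESTAMP_DIFF(end_time, start_time, MILLISECOND) / 1000 AS elapsed_seconds,
  query
FROM
  `region-us`.INFORMATION_SCHEMA.JOBS_BY_USER
WHERE
  job_type = 'QUERY'
  AND creation_time > TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 1 DAY)
ORDER BY
  creation_time DESC
LIMIT 10;
```

Running the same query twice in a row typically returns cached results, which is one reason elapsed times differ between runs.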

Next, paste the following query into the editor:

```sql
SELECT
  language,
  title,
  SUM(views) AS views
FROM
  `bigquery-samples.wikipedia_benchmark.Wiki10B`
WHERE
  title LIKE '%Google%'
GROUP BY
  language,
  title
ORDER BY
  views DESC;
```

Then click Run.

This query processed 425 GB of data in 8.3 seconds.

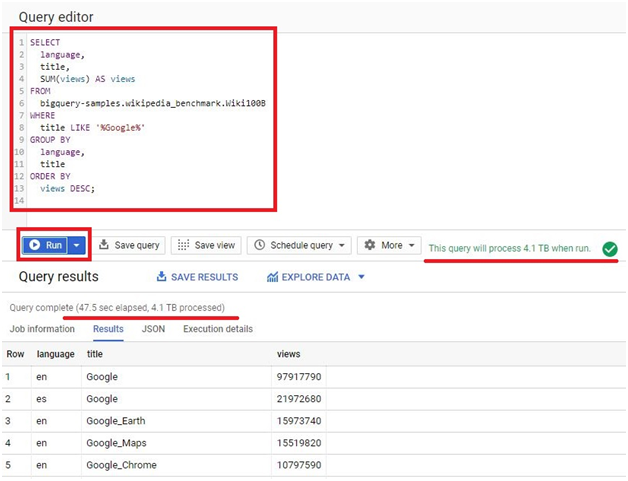
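Before scaling up, you can compare the sizes of the two benchmark tables. This sketch reads the dataset's `__TABLES__` metadata table and assumes your account can read that metadata for this public dataset. `size_bytes` is total storage, so it can be larger than what a query touching only a few columns processes.

```sql
-- Compare row counts and storage size of the two benchmark tables.
-- Assumption: __TABLES__ metadata is readable for this public dataset.
SELECT
  table_id,
  row_count,
  size_bytes / POW(1024, 4) AS size_tib
FROM
  `bigquery-samples.wikipedia_benchmark.__TABLES__`
WHERE
  table_id IN ('Wiki10B', 'Wiki100B')
ORDER BY
  row_count;
```

Because BigQuery stores data by column, a query only processes the columns it references. That is consistent with the three-column query above processing less data than `SELECT *` on the same table.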

Now paste the following query, which runs the same search against the much larger Wiki100B table:

```sql
SELECT
  language,
  title,
  SUM(views) AS views
FROM
  `bigquery-samples.wikipedia_benchmark.Wiki100B`
WHERE
  title LIKE '%Google%'
GROUP BY
  language,
  title
ORDER BY
  views DESC;
```

Click Run.

This query processed 4.1 TB of data in 47.5 seconds.
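The reported figures for the two `Google` queries also give a rough throughput. This worked arithmetic assumes 1 TB = 1000 GB and uses only the numbers reported in this walkthrough, so treat it as an approximation:

$$
\frac{425\ \text{GB}}{8.3\ \text{s}} \approx 51.2\ \text{GB/s},
\qquad
\frac{4100\ \text{GB}}{47.5\ \text{s}} \approx 86.3\ \text{GB/s}
$$

In other words, about 9.6 times as much data (4100 / 425) took about 5.7 times as long (47.5 / 8.3), so the effective throughput was higher on the larger table.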